- ↔

- →

- It's time for modern CSS to kill the SPA - Jono Alderson

- Two Simple Rules to Fix Code Reviews

- Artisanal Handcrafted Git Repositories | drew's dev blog

- Reflections on OpenAI

- AlphaGolang | A Step-by-Step Go Malware Reversing Methodology for IDA Pro | SentinelOne

- July 26, 2025

-

🔗 r/reverseengineering Can You Crack This Program? (Beginner Reverse Engineering Tutorial) rss

submitted by /u/tucna

[link] [comments] -

🔗 r/reverseengineering Rooting the TP-Link Tapo C200 Rev.5 rss

submitted by /u/g_e_r_h_a_r_d

[link] [comments] -

🔗 r/reverseengineering Trying to control Pi Browser in Android emulator with Frida—anyone pulled off deep automation like this? rss

I’m working on a pretty advanced automation project where I want to fully control the Pi Browser inside an Android Studio emulator using Frida—not just basic clicks, but deep function-level manipulation and real-time code execution.

submitted by /u/LongjumpingBag6270

[link] [comments] -

🔗 uxmal/reko Version 0.12.1 release

A new minor release of Reko is available. The main focus of the release has been to provide better XML documentation, which has been requested by users.

-

XML documentation is now available for all classes in the

Reko.CoreandReko.Decompilerassemblies. In addition the build now treats missing XML documententation on types and type members as compiler errors. This will enforce a higher standard of documentation moving forward. -

The Reko.Decompiler.Runtime Nuget now includes the XML documentation.

-

Support for disassembling and lifting PDP-1 machine code was added. I'm fairly confident that Reko is currently the only decompiler supporting this venerable architecture!

-

Added support for OKI NX8/200 and NX8/500 architectures.

-

Added support for Intel's APX instruction prefix.

-

General code cleanup and fixes. Removed many classes that have become obsolete over the years.

-

@smx-smx provided a fix for a crash in the ELF loader when loading ELF files with unknown ABIs.

-

Fixed the command line driver to better handle command line arguments when disassembling raw images.

-

More work on ScannerV2 to handle delay slots correctly on Sparc, Mips, and PA-RISC architectures.

-

Added support for loading little-endian 64-bit PowerPC ELF images.

-

Eliminated the

OutArgumentStorageclass. This class was confusing and hard to use. It has been replaced by the newOutputscollection onFunctionTypewhich tracks

the registers used for returning values from procedure calls. -

The new method

ITestGenerationService.GenerateUnitTestFromProcedurecan now generate compileable C# code when crashes occur. This will greatly improve handling user-reported problems.

Special thanks to @smx-smx for troubleshooting various ELF loading issues.

-

-

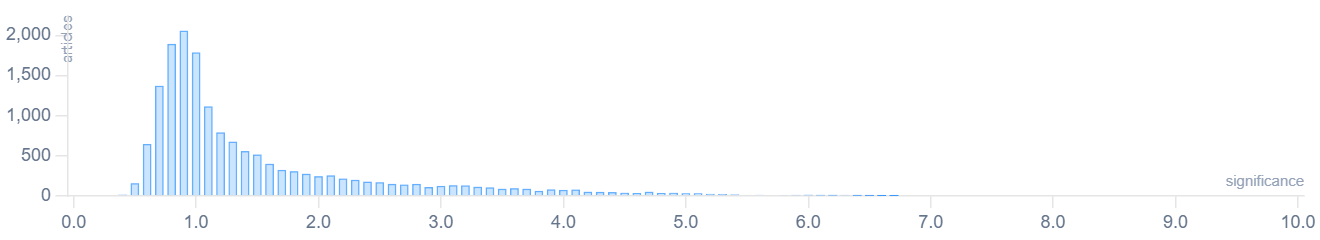

🔗 News Minimalist 🐢 China to lead new global AI organization + 8 more stories rss

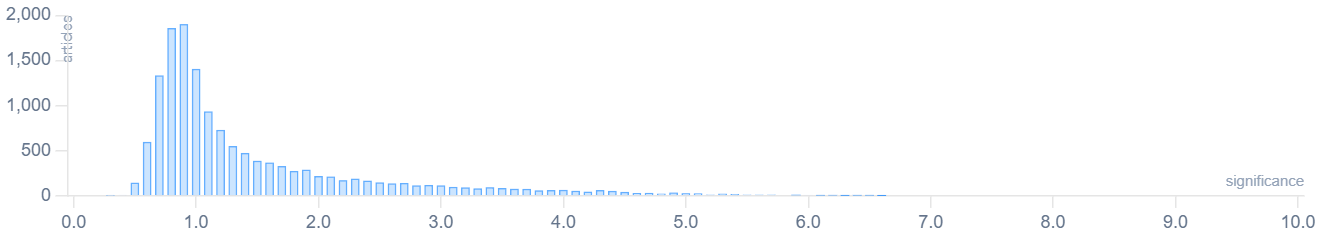

In the last 2 days ChatGPT read 56909 top news stories. After removing previously covered events, there are 9 articles with a significance score over 5.9.

[6.2] China to lead global AI development organization —japantimes.co.jp(+11)

China will lead the formation of an international organization for joint AI development, aiming to prevent a few nations or companies from monopolizing the technology.

Premier Li Qiang stated that global cooperation is necessary to address AI's risks, such as job displacement and economic disruption, during the World Artificial Intelligence Conference in Shanghai.

This initiative follows U.S. restrictions on China's tech sector, including chip exports, and underscores Beijing's commitment to advancing its AI ambitions despite semiconductor shortages.

[6.6] EU regulator approves injectable HIV prevention drug —apnews.com(+16)

The European Medicines Agency recommended authorizing a twice-yearly injectable drug to prevent HIV, potentially ending its transmission.

The drug, lenacapavir, was found highly effective and of major public health interest. It is already used to treat HIV and studies showed it was nearly 100% effective in preventing transmission.

This injectable offers longer protection than existing options, but concerns exist about its widespread availability, though Gilead plans generic versions for many low-income countries.

Highly covered news with significance over 5.5

[6.3] Australia, UK sign submarine pact — smh.com.au (+17)

[6.2] France's top court says arrest warrant for Assad is invalid, new one can be issued — reuters.com (+16)

[5.8] Trump orders AI to avoid "woke" content — apnews.com (+13)

[5.8] France recognizes Palestinian statehood, first G7 nation to do so — dw.com (+317)

[5.7] Google’s new “Web Guide” will use AI to organize your search results — arstechnica.com (+17)

[5.5] Trump's order targets homeless encampments — apnews.com (+37)

[5.5] UK's new Online Safety Act to protect children from ‘toxic algorithms’ — independent.co.uk (+28)

Thanks for reading!

— Vadim

You can create your own personal newsletter like this with premium.

-

🔗 @HexRaysSA@infosec.exchange 📢 Calling all CTF (Capture the Flag) Organizers and Competitors! mastodon

📢 Calling all CTF (Capture the Flag) Organizers and Competitors!

Hex-Rays is now accepting applications for our CTF Sponsorship Program—and we want to hear from you.

Each year, we sponsor:

• 4 CTF events (from global competitions to student-ran challenges)

• 4 teams (3 top-tier squads and a crew of rising stars)Perks include FREE IDA licenses, exclusive swag, and travel support. For more information, please visit:

https://hex-rays.com/blog/ctf-sponsorship-program -

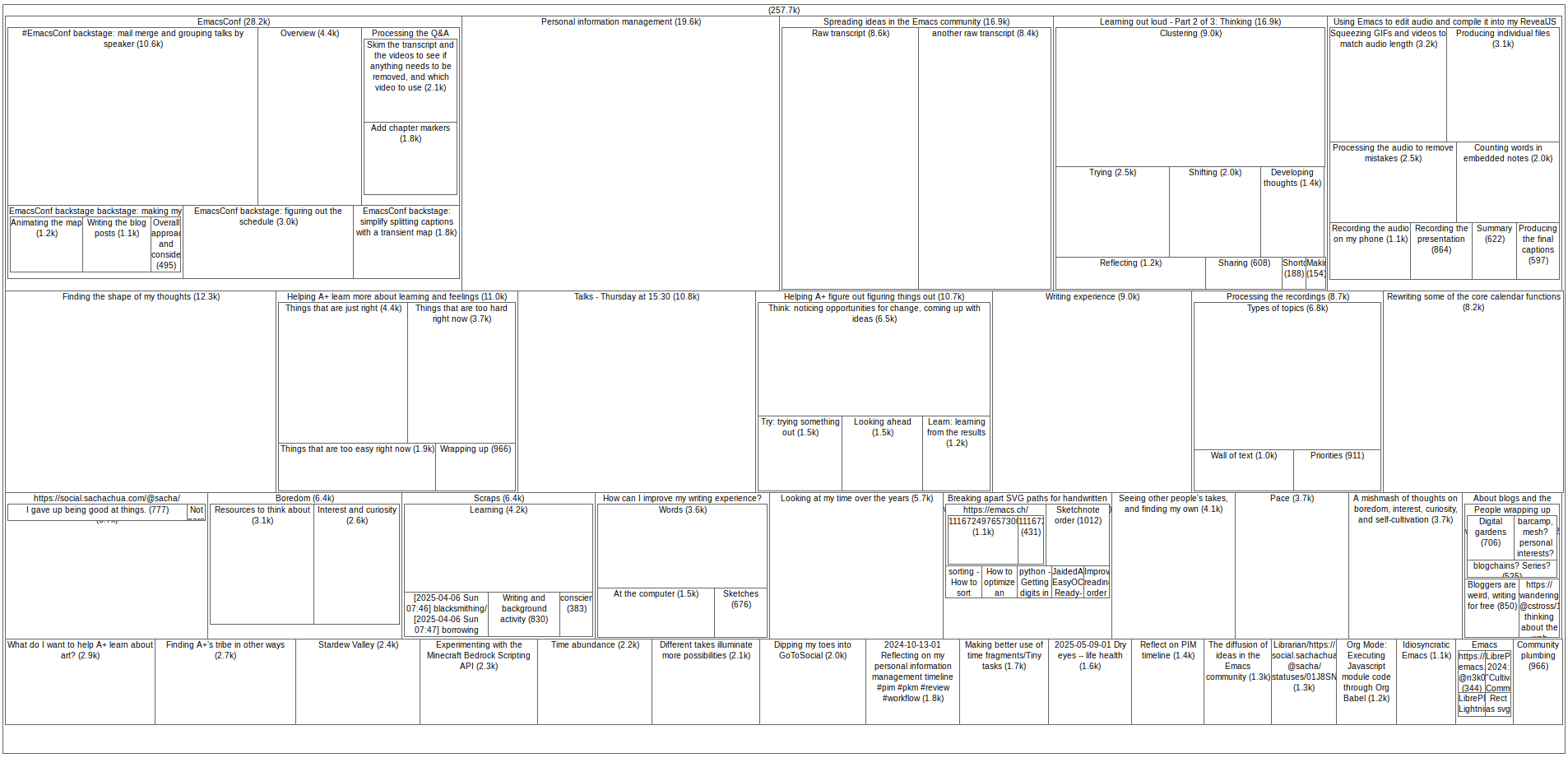

🔗 sacha chua :: living an awesome life My Emacs writing experience rss

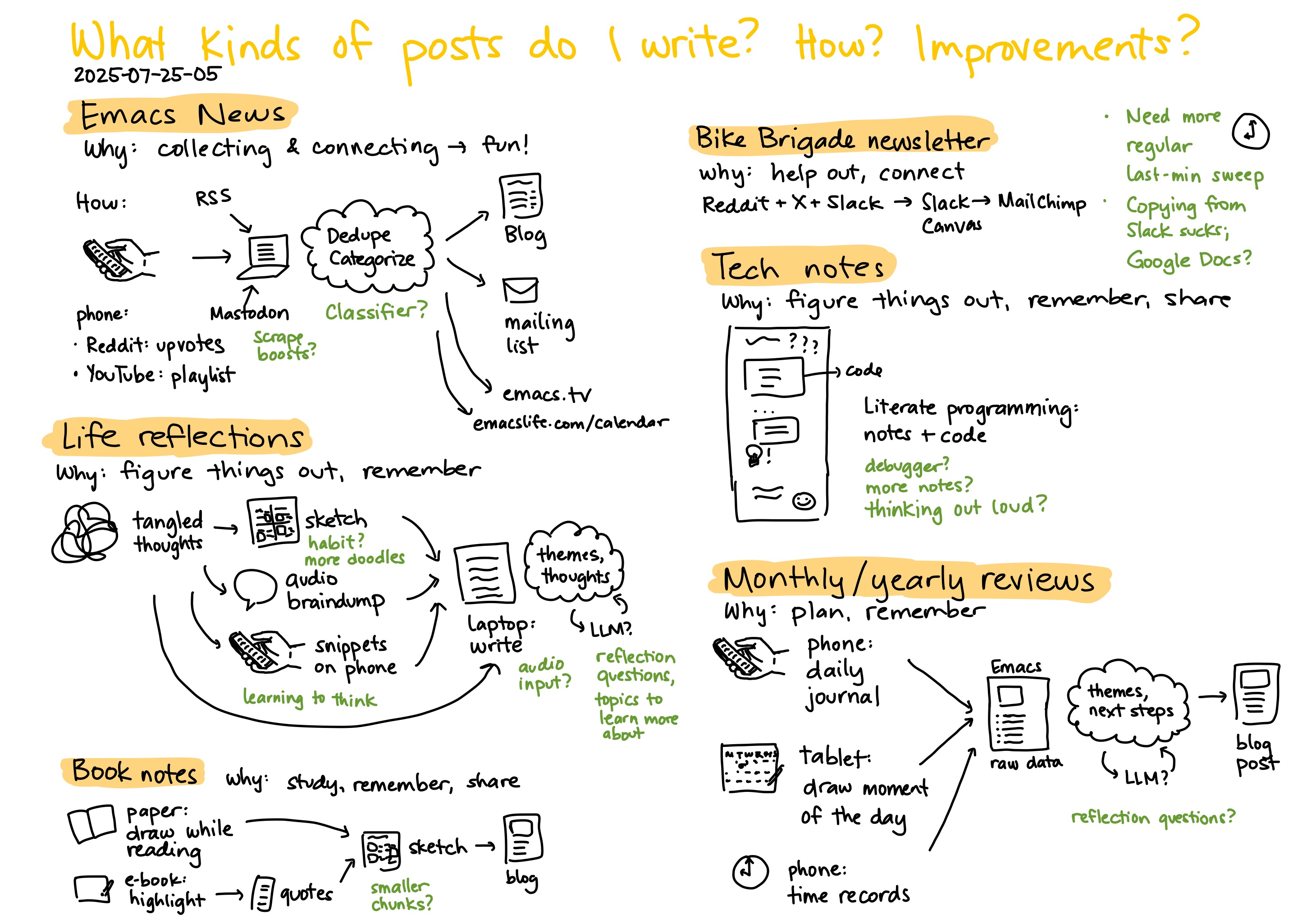

I've been enjoying reading people's responses to the Emacs Carnival July theme of writing experience. I know I don't need complicated tools to write. People can write in composition notebooks and on typewriters. But I have fun learning more about the Emacs text editor and tweaking it to support me. Writing is one of the ways I think, and I want to think better. I'll start with the kinds of things I write in my public and private notes, and then I'll think about Emacs specifically.

Types of notes

Text from sketchWhat kinds of posts do I write? How? Improvements?

2025-07-25-05

- Emacs News

- why: collecting & connecting → fun!

- how:

- phone: Reddit: upvotes

- YouTube: playlist

- RSS

- Mastodon: Scrape boosts?

- Dedupe, categorize: classifier?

- Blog

- Mailing list

- emacs.tv

- emacslife.com/calendar

- Bike Brigade newsletter

- why: help out, connect

- Reddit + X + Slack -> Slack canvas -> MailChimp

- Need more regular last-min sweep

- Copying from Slack sucks; Google Docs?

- Tech notes

- Why: figure things out, remember, share

- code

- literate programming: notes + code

- debugger?

- more notes?

- thinking out loud?

- Life reflections

- Why: figure things out, remember

- tangled thoughts

- sketch: habit? more doodles

- audio braindump

- snippets on phone

- learning to think

- laptop: write

- audio input?

- themes, thoughts

- LLM? reflection questions, topics to learn more about

- Book notes

- Why: study, remember, share

- paper: draw while reading

- e-book: highlight

- quotes

- sketch

- smaller chunks?

- blog

- Monthly/yearly reviews

- Why: plan, remember

- phone: daily journal

- tablet: draw moment of the day

- phone: time records

- Emacs: raw data

- themes, next steps: LLM? reflection questions?

- blog post

Emacs News

I put together a weekly list of categorized links about the interesting ways people use Emacs. This takes me about an hour or two each week. I enjoy collecting all these little examples of people's curiosity and ingenuity. Organizing the links into a list helps people find things they might be interested in and connect with other people.

I start by skimming r/emacs and r/orgmode on my phone, upvoting posts that I want to include. I also search YouTube and add videos to an Emacs News playlist. I review aggregated posts from Planet Emacslife. I have an Emacs Lisp function that collects all the data and formats them as a list, with all the items at the same level.

For Mastodon, I check #emacs search results from a few different servers. I have a keyboard shortcut that boosts a post and captures the text to an Org Mode file, and then I have another function that prompts me to summarize toots, defaulting to the title of the first link. I have more functions that help me detect duplicates and categorize links. I use ox-11ty to export the post to my blog, which uses the Eleventy static site generator. I also use emacstv.el to add the videos to the Org file I use for emacs.tv.

Some ways to improve this:

- I probably have enough data that it might be interesting to learn how to write a classifier. On the other hand, regular expression matches on the titles get most of them correctly, so that might be sufficient.

- YouTube videos are a little annoying to go through because of interface limitations and unrelated or low-effort videos. I can probably figure out something that checks the RSS feeds of various channels.

Bike Brigade newsletter

I also put together a weekly newsletter for Bike Brigade, which coordinates volunteer cyclists to deliver food bank hampers and other essentials. Writing this mostly involves collecting ideas from a number of social media feeds as well as the other volunteers in the community, putting together a draft, and then copying it over to Mailchimp. I'm still figuring out my timing and workflows so that I can stay on top of last-minute requests coming in from people on Slack, and so that I can repurpose newsletter items as updates in the Facebook group or maybe even a blog. If I set aside some regular time to work on things, like a Sunday morning sweep for last-minute requests, that might make it easier to work with other people.

Tech notes

I like coding, and I come up with lots of ideas as I use my computer. I enjoy figuring out workflow tweaks like opening lots of URLs in a region or transforming HTML clipboard contents. My Org files have accumulated quite a few. My main limiting factor here is actually sitting down to make those things happen. Fortunately, I have recently discovered that it's possible for me to spend an hour or two a day playing Stardew Valley, so I can swap some of that time for Emacs tweaking instead. Coding doesn't handle interruptions as well as playing does, but taking notes along the way might be able to help with that. I can jump to the section of my Org file with the ideas I wanted to save for more focus time, pick something from that screen, and get right to it.

Other things that might help me do this more effectively would be:

- getting better at using my tools (debugger, documentation, completion, etc.),

- taking the opportunity to plug in an external monitor, and

- using my non-computer time to mull over the ideas so that I can hit the ground running.

I like taking notes at virtual meetups. I usually do this with Etherpad so that other people can contribute to the notes too. I don't have a real-time read-write Emacs interface to this yet (that would be way cool!), but I do have some functions for working with the Etherpad text.

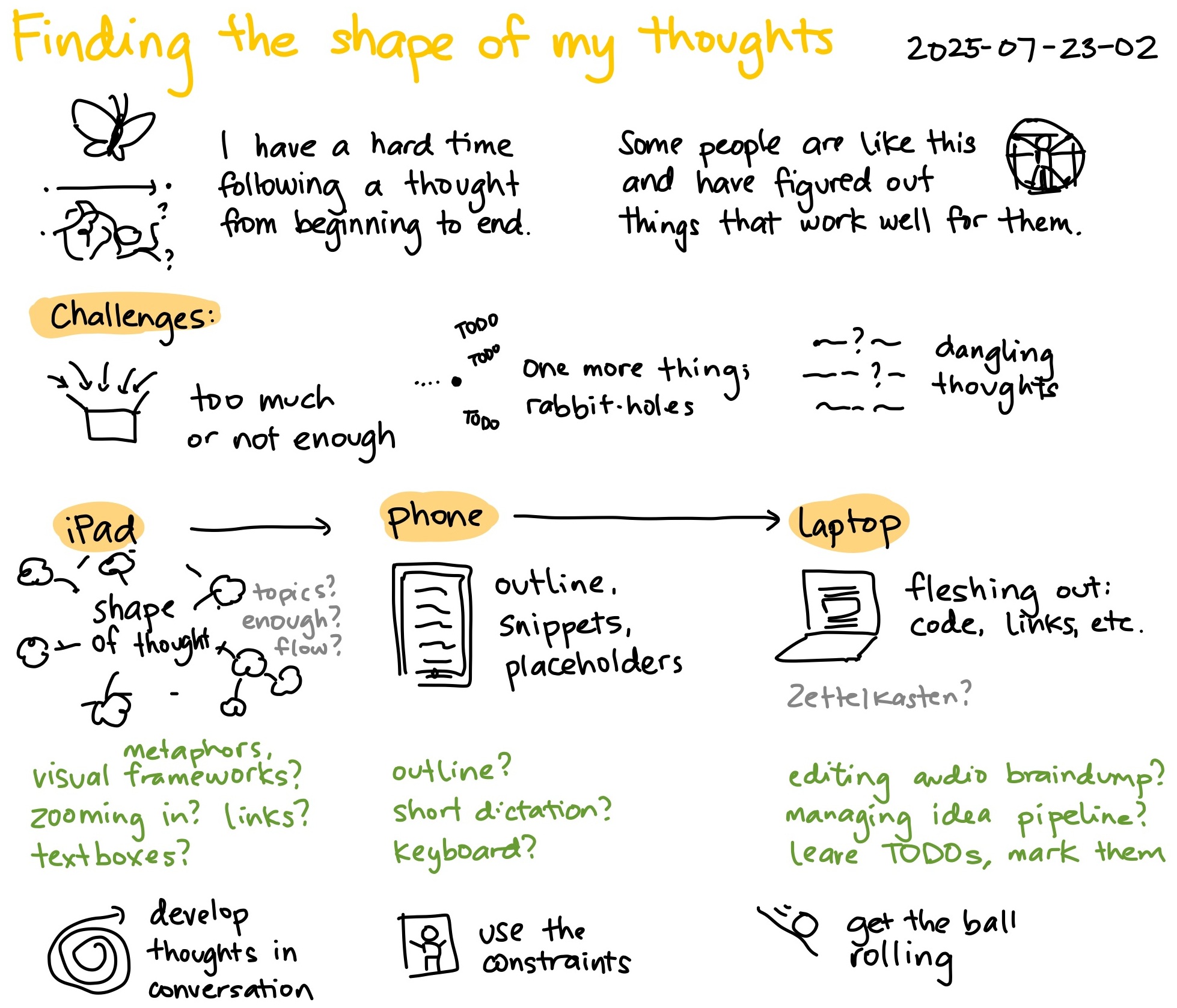

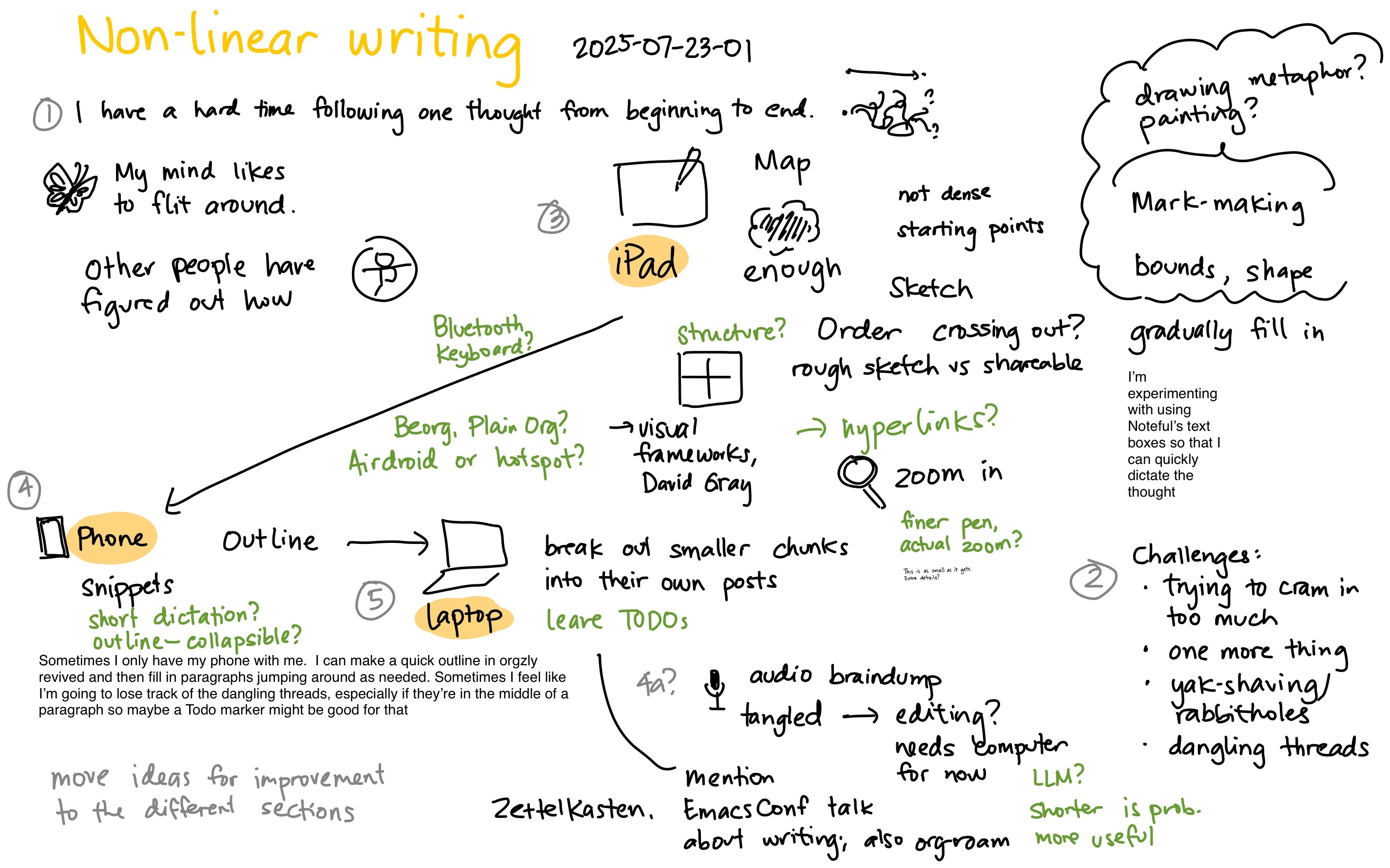

Life reflections

When I notice something I want to figure out or remember, I use sketches, audio braindumps, or typing to start to untangle that thought. Sometimes I use all three, shifting from one tool to another depending on what I can do at the moment. I have a pretty comfortable workflow for converting sketches (Google Vision) or audio (OpenAI Whisper) to text so that I can work with it more easily, and I'm sure that will get even smoother as the technology improves. I switch from one tool to another as I figure out the shape of my thoughts.

Maybe I can use microblogging to let smaller ideas out into the world, just in case conversations build them up into more interesting ideas. I don't quite trust my ability to manage my GoToSocial instance yet (backups? upgrades?), so that might be a good reason to use a weekly or monthly review to revisit and archive those posts in plain text.

I've been reading my on this day list of blog posts and sketches more regularly now that it's in my feed reader. I like the way this helps me revisit old thoughts, and I've saved a few that I want to follow up on. It feels good to build on a thought over time.

I'd like to do more of this remembering and thinking out loud because memories are fleeting. Maybe developing more trust in my private journals and files will help. (Gotta have those backups!) Then I'll be more comfortable writing about the things we're figuring out about life while also respecting A+ and W-'s privacy, and I can post the stuff I'm figuring out about my life that I'm okay with sharing. I might think something is straightforward, like A+'s progress in learning how to swim. I want to write about how that's a microcosm of how she's learning how to learn more independently and my changing role in supporting her. Still, she might have other opinions about my sharing that, either now or later on. I can still reflect on it and keep that in a private journal as we figure things out together.

Even though parenting takes up most of my time and attention at the moment, it will eventually take less. There are plenty of things for me to learn about and share outside parenting, like biking, gardening, and sewing. I've got books to read and ideas to try out.

I'm experimenting with doing more writing on my phone so that I can get better at using these little bits of time. Swiping letters on a keyboard is reasonably fast, and the bottleneck is my thinking time anyway. I use Orgzly Revived so that Syncthing can synchronize it with my Org Mode files on my laptop when I get back home. There are occasional conflicts, but since I mostly add to an inbox.org when I'm on my phone, the conflicts are usually easy to resolve.

Adding doodles to my reflections can make them more fun. I can draw stick figures from scratch, and I can also trace my photos using the iPad as a way to add visual anchors and practise drawing. If I get the hang of using a smaller portion of my screen like the way I used to draw index cards, that might make thoughts more granular and easier to complete.

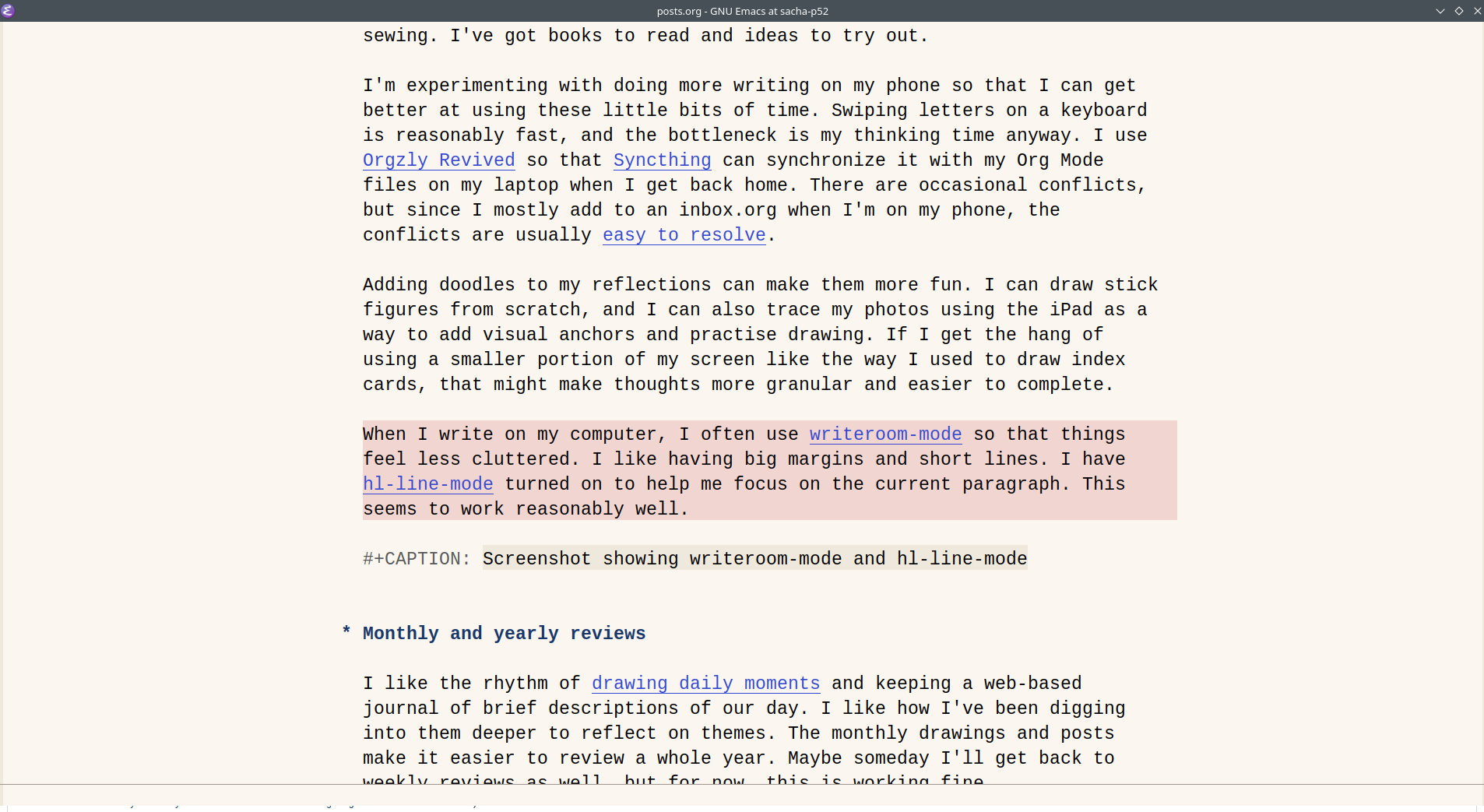

When I write on my computer, I often use writeroom-mode so that things feel less cluttered. I like having big margins and short lines. I have hl-line-mode turned on to help me focus on the current paragraph. This seems to work reasonably well.

Figure 1: Screenshot showing writeroom-mode and hl-line-mode Monthly and yearly reviews

I like the rhythm of drawing daily moments and keeping a web-based journal of brief descriptions of our day. I like how I've been digging into them deeper to reflect on themes. The monthly drawings and posts make it easier to review a whole year. Maybe someday I'll get back to weekly reviews as well, but for now, this is working fine.

My journal entries do a decent job of capturing the facts of our days: where we went, what we did. Maybe spending more time writing life reflections can help me capture more of what goes on in my head and what I want to learn more about.

Book notes

I draw single-page summaries of books I like because they're easier to remember and share. E-books are convenient because I can highlight text and extract that data even after I've returned the book, but I can also retype things from paper books or use the text recognition feature on my phone camera. I draw the summaries on my iPad using Noteful, and then I run it through my Google Vision workflow to convert the text from it so that I can include it in a blog post.

The main limiting factor here is my patience in reading a book. There are so many other wonderful things to explore, and sometimes it feels like books have a bit of filler. When I have a clear topic I'm curious about or a well-written book to enjoy, it's easier to study a book and make notes.

Emacs workflow thoughts

Aside from considering the different types of writing I do, I've also been thinking about the mechanics of writing in Emacs. Sanding down the rough parts of my workflow makes writing more enjoyable, and sometimes a small tweak lets me squeeze more writing into fragments of time.

There are more commands I want to call than there are keyboard shortcuts I can remember. I tend to use

M-xto call commands by name a lot, and it really helps to have some kind of completion (I use vertico) and orderless matching.I'm experimenting with more voice input because that lets me braindump ideas quickly on my phone. Long dictation sessions are a little difficult to edit. Maybe shorter snippets using the voice input mode on the phone keyboard will let me flesh out parts of my outline. I wonder if the same kind of quick input might be handy on my computer. I'm trying out whisper.el with my Bluetooth earbuds. Dictating tends to be stop-and-go, since I feel self-conscious about dictating when other people are around and I probably only have solo time late at night.

Misrecognized words can be annoying to correct on my phone. They're much easier to fix on my computer. Some corrections are pretty common, like changing Emax to Emacs. I wrote some code for fixing common errors (my-subed-fix-common-errors), but I don't use this often enough to have it in my muscle memory. I probably need to tweak this so that it's a bit more interactive and trustworthy.

When I see a word I want to change, I jump to it with

C-s(isearch-forward) orC-r(isearch-backward), or I navigate to it withM-f(forward-word). I want to get the hang of using Avy because of Karthik's awesome post about it. That post is from 2021 and I still haven't gotten used to it. I probably just need deliberate practice using the shortcut I've mapped toM-j(avy-goto-char-timer). Or maybe I just don't do this kind of navigation enough yet to justify this micro-optimization (no matter how neat it could be), and isearch is fine for now.Sometimes I want to work with sentences. expand-region is another thing I want to get used to. I've bound

C-=toer/expand-regionfrom that package. Then I should be able to easily kill the text and type a replacement or move things around. In the meantime, I can usually remember to use my keyboard shortcut ofM-zforavy-zap-up-to-char-dwimfor deleting something.Even in vanilla Emacs, there's so much that I think I'll enjoy getting the hang of. oantolin's post on his writing experience helped me learn about

M-E, which marks the region from the point to the end of the sentence and is a natural extension fromM-e. Similarly,M-Fselects the next word. I could use this kind of shift-selection more. I occasionally remember to transpose words withM-t, but I've been cutting and pasting sentences when I could've been usingtranspose-sentencesall this time. I'm going to add(keymap-global-set "M-T" #'transpose-sentences)to my config and see if I remember it.I like using Org Mode headings to collapse long text into a quick overview so I can see the big picture, and they're also handy for making tables of contents. It might be neat to have one more level of overview below that, maybe displaying only the first line of each paragraph. In the meantime, I can use

toggle-truncate-linesto get that sort of view.If I'm having a hard time fitting the whole shape of a thought into my working memory, I sometimes find it easier to work with plain list outlines that go all the way down to sentences instead of working with paragraphs. I can expand/collapse items and move them around easily using Org's commands for list items. In addition,

org-toggle-itemtoggles between items and plain text, andorg-toggle-headingcan turn items into headings.I could probably write a command that toggles a whole section between an outline and a collection of paragraphs. The outline would be a plain list with two levels. The top level items would be the starting sentences of each paragraph, and each sentence after that would be a list item underneath it. Sometimes I use actual lists. Maybe those would be a third level. Then I can use Org Mode's handy list management commands even when a draft is further along. Alternatively, maybe I can use

M-S-leftandM-S-rightto move sentences around in a paragraph.Sometimes I write something and then change my mind about including it. Right now, I tend to either use

org-captureto save it or put it under a heading and then refile it to my Scraps subtree, but the palimpsest approach might be interesting. Maybe a shortcut to stash the current paragraph somewhere…I use custom Org link types to make it easier to link to topics, project files, parts of my Emacs configuration, blog posts, sketches, videos, and more. It's handy to have completion, and I can define how I want them to be exported or followed.

Custom Org link types also let me use Embark for context-sensitive actions. For example, I have a command for adding categories to a blog post when my cursor is on a link to the post, which is handy when I've made a list of matching posts. Embark is also convenient for doing things from other commands. It's nice being able to use

C-. ito insert whatever's in the minibuffer, so I can use that fromC-h f(describe-function),C-h v(describe-variable), or other commands.I also define custom Org block types using org-special-block-extras. This lets me easily make things like collapsible sections with summaries.

I want to get better at diagrams and charts using things like graphviz, mermaidjs, matplotlib, and seaborn. I usually end up searching for an example I can build on and then try to tweak it. Sometimes I just draw something on my iPad and stick it in. It's fine. I think it would be good to learn computer-based diagramming and charting, though. They can be easier to update and re-layout when I realize I've forgotten to add something to the graph.

Figuring out the proper syntax for diagrams and charts might be one of the reasonable use cases for large-language models, actually. I'm on the fence about LLMs in general. I sometimes use claude.ai for dealing with the occasional tip of the tongue situation like "What's a word or phrase that describes…" and for catching when I've forgotten to finish a sentence. I don't think I can get it to think or write like me yet. Besides, I like doing the thinking and writing.

I love reading about other people's workflows. If they share their code, that's fantastic, but even descriptions of ideas are fine. I learn so many things from the blog posts I come across on Planet Emacslife in the process of putting together Emacs News. I also periodically go through documentation like the Org Mode manual or release notes, and I always learn something new each time.

This post was really hard to write! I keep thinking of things I want to start tweaking. I treat Emacs-tweaking as a fun hobby that sometimes happens to make things better for me or for other people, so it's okay to capture lots of ideas to explore later on. Sometimes something is just a quick 5-minute hack. Sometimes I end up delving into the source code, which is easy to do because hey, it's Emacs. It's comforting and inspiring to be surrounded by all this parenthetical evidence of other people's thinking about their workflows.

Each type of writing helps me with a different type of thinking, and each config tweak makes thoughts flow more smoothly. I'm looking forward to learning how to think better, one note at a time.

Check out the Emacs Carnival July theme: writing experience post for more Emacs ideas. Thanks to Greg Newman for hosting!

You can comment on Mastodon or e-mail me at sacha@sachachua.com.

- Emacs News

-

🔗 r/reverseengineering Reverse Engineering for Bugs Part 1 - How I discovered My 1st 0day in Windows. rss

Sometimes learning by reversing make you discover 0days, in one place, I discovered 2 Vulnerabilities that able to crash the system.

While doing my malware analysis as usual, I asked myself a question, What’s a process!?

Yes, I know the answer, but what even that mean?

What’s the process journey in Windows? How? What? Where? Why?

If a Reverse Engineer need answers, that means he will reverse to find these answers.

submitted by /u/ammarqassem

[link] [comments]

-

- July 25, 2025

-

🔗 Textualize/textual The appended release release

A hotfix. See below for details.

[5.0.1] - 2025-07-25

Fixed

- Fixed appending to Markdown widgets that were constructed with an existing document #5990

-

🔗 r/reverseengineering GTA 2 re-implementation project by CriminalRETeam rss

submitted by /u/r_retrohacking_mod2

[link] [comments] -

🔗 @trailofbits@infosec.exchange At DistrictCon's inaugural Junkyard competition, we achieved full remote mastodon

At DistrictCon's inaugural Junkyard competition, we achieved full remote execution on two popular home network devices: a Netgear WGR614v9 router and BitDefender Box V1 security appliance.

Our exploitation techniques included chaining four buffer overflow vulnerabilities with authentication bypass on the router, plus a novel "bashsledding" ROP technique that sprays shell commands into NVRAM for reliable code execution.

Read the blog: https://blog.trailofbits.com/2025/07/25/exploiting-zero-days- in-abandoned-hardware/

-

🔗 3Blue1Brown (YouTube) But how do AI videos actually work? | Guest video by @WelchLabsVideo rss

Diffusion models, CLIP, and the math of turning text into images Welch Labs Book: https://www.welchlabs.com/resources/imaginary-numbers-book

Sections 0:00 - Intro 3:37 - CLIP 6:25 - Shared Embedding Space 8:16 - Diffusion Models & DDPM 11:44 - Learning Vector Fields 22:00 - DDIM 25:25 Dall E 2 26:37 - Conditioning 30:02 - Guidance 33:39 - Negative Prompts 34:27 - Outro 35:32 - About guest videos + Grant’s Reaction

Special Thanks to: Jonathan Ho - Jonathan is the Author of the DDPM paper and the Classifier Free Guidance Paper. https://arxiv.org/pdf/2006.11239 https://arxiv.org/pdf/2207.12598

Preetum Nakkiran - Preetum has an excellent introductory diffusion tutorial: https://arxiv.org/pdf/2406.08929

Chenyang Yuan - Many of the animations in this video were implemented using manim and Chenyang’s smalldiffusion library: https://github.com/yuanchenyang/smalldiffusion

Cheyang also has a terrific tutorial and MIT course on diffusion models https://www.chenyang.co/diffusion.html https://www.practical-diffusion.org/

Other References All of Sander Dieleman’s diffusion blog posts are fantastic: https://sander.ai/ CLIP Paper: https://arxiv.org/pdf/2103.00020 DDIM Paper: https://arxiv.org/pdf/2010.02502 Score-Based Generative Modeling: https://arxiv.org/pdf/2011.13456 Wan2.1: https://github.com/Wan-Video/Wan2.1 Stable Diffusion: https://huggingface.co/stabilityai/stable-diffusion-2 Midjourney: https://www.midjourney.com/ Veo: https://deepmind.google/models/veo/ DallE 2 paper: https://cdn.openai.com/papers/dall-e-2.pdf Code for this video: https://github.com/stephencwelch/manim_videos/tree/master/_2025/sora

Written by: Stephen Welch, with very helpful feedback from Grant Sanderson Produced by: Stephen Welch, Sam Baskin, and Pranav Gundu

Technical Notes The noise videos in the opening have been passed through a VAE (actually, diffusion process happens in a compressed “latent” space), which acts very much like a video compressor - this is why the noise videos don’t look like pure salt and pepper.

6:15 CLIP: Although directly minimizing cosine similarity would push our vectors 180 degrees apart on a single batch, overall in practice, we need CLIP to maximize the uniformity of concepts over the hypersphere it's operating on. For this reason, we animated these vectors as orthogonal-ish. See: https://proceedings.mlr.press/v119/wang20k/wang20k.pdf

Per Chenyang Yuan: at 10:15, the blurry image that results when removing random noise in DDPM is probably due to a mismatch in noise levels when calling the denoiser. When the denoiser is called on x_{t-1} during DDPM sampling, it is expected to have a certain noise level (let's call it sigma_{t-1}). If you generate x_{t-1} from x_t without adding noise, then the noise present in x_{t-1} is always smaller than sigma_{t-1}. This causes the denoiser to remove too much noise, thus pointing towards the mean of the dataset.

The text conditioning input to stable diffusion is not the 512-dim text embedding vector, but the output of the layer before that, with dimension 77x512

For the vectors at 31:40 - Some implementations use f(x, t, cat) + alpha(f(x, t, cat) - f(x, t)), and some that do f(x, t) + alpha(f(x, t, cat) - f(x, t)), where an alpha value of 1 corresponds to no guidance. I chose the second format here to keep things simpler.

At 30:30, the unconditional t=1 vector field looks a bit different from what it did at the 17:15 mark. This is the result of different models trained for different parts of the video, and likely a result of different random initializations.

Premium Beat Music ID: EEDYZ3FP44YX8OWT

-

🔗 Textualize/textual The Tabled release. release

This is quite a large release! Fueled in part by my work on Toad

Markdown rendering has been improved, with full text selection, prettier code blocks and tables. Plus streaming support.

Plenty of other fixes and additions. Thats to everyone who contributed code and issues!

There are two breaking changes (see below). These are unlikely to affect anyone, but Semver requires bumping the major version number.

[5.0.0] - 2025-07-25

Added

- Added get_minimal_width to Visual protocol #5962

- Added

expandandshrinkattributes to GridLayout #5962 - Added

Markdown.get_stream#5966 - Added

textual.highlightmodule for syntax highlighting #5966 - Added

MessagePump.wait_for_refreshmethod #5966 - Added

Widget.container_scroll_offsete84600c - Added

Markdown.sourceattribute to MarkdownBlockse84600c - Added extension mechanism to Markdown

e84600c - Added

indextoListView.Selectedevent #5973 - Added

layoutswitch to Static.update #5973

Fixed

- Fixed

TextAreaissue with thecsstheme, where the background color was stuck from the previous theme #5964

Changed

- Improved rendering of Markdown tables (replace Rich table with grid) which allows text selection #5962

- Change look of command palette, to drop accented borders #5966

- Some style tweaks to Markdown

e84600c - Content markup can now accept component classes when preceded by a dot, e.g. "Hello [.my_custo_style]World[/]!" #5981

- Breaking change:

Visual.render_stripshas a new signature. If you aren't explicitly building Visuals then this won't effect you. #5981 - Breaking change: The component classes on Markdown have been moved to MarkdownBlock. This won't affect you unless you have customize the Markdown CSS #5981

- The textual-speedups library will now be imported automatically if it is installed. Set

TEXTUAL_SPEEDUPS=0to disable.

-

🔗 r/wiesbaden What are people with small kids doing during the day? rss

Background: We’re in a bit of a unique situation in that my husband stays at home and is the primary caregiver for our 2-year old son. We don’t actually live in Wiesbaden, but down the road in hofheim. We are a military family, and I’m the service member.

My husband’s language skills are pretty limited. He can understand some German, but learned it via pimsleur so his speaking and reading aren’t that good.

Question: What are parents who stay at home doing with their kids during the day? My husband takes our son to a playground for two hours in the morning in the hopes of meeting some other kids/parents but it’s been empty the whole time except for one. We signed up for kinder-turn but there is a waitlist. My husband will have a car starting tomorrow so I was going to get them a Schwimmbad membership (would love recommendations!). But outside of that… where could my husband and son go to socialize some and learn some of the language?

Thanks in advance for the help!

submitted by /u/Alert-Count8542

[link] [comments] -

🔗 Will McGugan Why I ban users from my repositories rss

I’ve been maintaining various Open Source projects for more than a decade now. In that time I have had countless interactions with users reporting issues and submitting pull requests. The vast majority of these interactions are positive, polite, and constructive. In fact, it is these interactions which make me continue to do the work.

A few haven’t been so pleasant, and I have banned a subset of the users involved. If we exclude spam, I think the number of users I have banned over the years may still be a single figure.

There are three broad categories of banned users, listed below.

Spammers

I’m sure there is a place for links to sites that sell male enhancement pills, but my repositories are not it. Spam earns you an instant ban. This doesn’t happen all that often as GitHub is quite proactive about dealing with spam. Often I’ll get a notification, but by the time I open it the spammer’s account will have been deleted.

As well as the usual spam nonsense, I’ve had some other weird stuff posted on my repositories. I recall one user that posted lengthy conspiracy theories, with something about terminals intertwined. I’m pretty sure this user wasn’t a spammer in the traditional sense, and was suffering from psychological issues. I had no choice but to ban them, but I genuinely hope they found treatment for their condition.

Venting

A venting post almost always starts with “I spent X hours / days on this”. Such users want me to know how much I have inconvenienced them and they are rarely genuine in asking for help.

I try to be generous, but that phrase tends to put me on the defensive. I’m not going to treat the issue as a priority, and when I do respond it will be a tad more snarky that usual.

Venting posts are almost always a skill issue in behalf of the user. The user has either misread the docs or not read them at all, and they have consequently made some invalid assumptions about how the API should work. They couldn’t get their code to work, because it was never intended to work in they way they were using it. Rather than stepping back to reconsider their approach, or read the docs again, they want to shift the blame to me.

Venting is not an instant ban, because I know how frustrating programming can be. But virtually 100% of these issues are resolved with a link to the docs, and none of reply with a “my bad” even if I suppress my snark.

So not an instant ban, but if venting crosses the line to personal abuse, then I’m going to be reaching for that ban button.

Almost everyone recognizes that an posting an issue is essentially asking a favor. If you want somebody to help you move house, you wouldn’t start by criticizing their driving. Same deal with issues.

Time wasting

This last category is trickier to define, as its not a single transgression like the others. It’s more of a pattern of behavior that sucks time that could be better spent helping other users or writing code.

So what constitutes time wasting?

It will often start with well meaning issues that are overly long. Pages and pages of text that don’t clearly describe the problem the user is tackling. In the end, the issue typically boils down to a perfectly legitimate “it crashes when I do this”. But it can take a lot of fruitless back and forth to get there.

One time I can overlook. But if it keeps happening, I can feel like I am essentially working for this user at the expense of other users.

Related, is the user who doesn’t listen or choses not to respond to my requests. Simple things like asking for the version of their OS or software they are using. Details that I need to properly investigate their issue. Sometimes it takes the form of ignoring a recommendation. I’ll let them know there is a canonical solution to that issue, and give them a short code snippet that resolves the issue with less work, but they won’t use it or even acknowledge it.

However the most common “not listening” issue is when I ask the user why they are attempting the thing they need help with. This can be vital in avoiding the XY Problem. If there is a better way of solving their problem then I can point them in the right direction. But only if they tell me. A few users have just refused to and repeatedly assert the odd thing they are trying to do is the only acceptable fix.

Other examples of time wasting include asking for LLM hallucinated code to work, posting lists of questions that can be answered with a skim over the docs, and posting the same questions in multiple support locations even after they have been answered (as though they will keep posting until they get a response they like)?

This is a tricky category, because the user can be well meaning. So its a very high threshold to be banned for this. I only consider it if the user is a clear net negative for the project.

Unbanning

I so rarely ban users, and when I do it’s for the good of the project. But I don’t hold grudges. Other than spammers, if anyone I have banned wants to contribute I am happy to un-ban if they reach out. No apology required, but I will require they avoid the ban categories above.

Open Source is awesome

I hope this post doesn’t sound too much like whinging.

I’d like to end by stressing that the community is what makes Open Source awesome. Everyone benefits by contributing in their own way.

-

- July 24, 2025

-

🔗 @binaryninja@infosec.exchange Binary Ninja 5.1 is now released: mastodon

Binary Ninja 5.1 is now released: https://binary.ninja/2025/07/24/5.1-helion.html

- New WARP function matching

- Pseudo Objective-C

- Binexport plugin built-in

- IL Rewriting Examples, APIs, and Docs

- Arch: PPC VLE, mips-r5900, x32+ Much more!

-

🔗 astral-sh/uv 0.8.3 release

Release Notes

Python

- Add CPython 3.14.0rc1

See the

python-build-standalonerelease notes for more details.Enhancements

Bug fixes

- Avoid writing redacted credentials to tool receipt (#14855)

- Respect

--withversions over base environment versions (#14863) - Respect credentials from all defined indexes (#14858)

- Fix missed stabilization of removal of registry entry during Python uninstall (#14859)

- Improve concurrency safety of Python downloads into cache (#14846)

Documentation

- Fix typos in

uv_buildreference documentation (#14853) - Move the "Cargo" install method further down in docs (#14842)

Install uv 0.8.3

Install prebuilt binaries via shell script

curl --proto '=https' --tlsv1.2 -LsSf https://github.com/astral-sh/uv/releases/download/0.8.3/uv-installer.sh | shInstall prebuilt binaries via powershell script

powershell -ExecutionPolicy Bypass -c "irm https://github.com/astral-sh/uv/releases/download/0.8.3/uv-installer.ps1 | iex"Download uv 0.8.3

File | Platform | Checksum

---|---|---

uv-aarch64-apple-darwin.tar.gz | Apple Silicon macOS | checksum

uv-x86_64-apple-darwin.tar.gz | Intel macOS | checksum

uv-aarch64-pc-windows-msvc.zip | ARM64 Windows | checksum

uv-i686-pc-windows-msvc.zip | x86 Windows | checksum

uv-x86_64-pc-windows-msvc.zip | x64 Windows | checksum

uv-aarch64-unknown-linux-gnu.tar.gz | ARM64 Linux | checksum

uv-i686-unknown-linux-gnu.tar.gz | x86 Linux | checksum

uv-powerpc64-unknown-linux-gnu.tar.gz | PPC64 Linux | checksum

uv-powerpc64le-unknown-linux-gnu.tar.gz | PPC64LE Linux | checksum

uv-riscv64gc-unknown-linux-gnu.tar.gz | RISCV Linux | checksum

uv-s390x-unknown-linux-gnu.tar.gz | S390x Linux | checksum

uv-x86_64-unknown-linux-gnu.tar.gz | x64 Linux | checksum

uv-armv7-unknown-linux-gnueabihf.tar.gz | ARMv7 Linux | checksum

uv-aarch64-unknown-linux-musl.tar.gz | ARM64 MUSL Linux | checksum

uv-i686-unknown-linux-musl.tar.gz | x86 MUSL Linux | checksum

uv-x86_64-unknown-linux-musl.tar.gz | x64 MUSL Linux | checksum

uv-arm-unknown-linux-musleabihf.tar.gz | ARMv6 MUSL Linux (Hardfloat) | checksum

uv-armv7-unknown-linux-musleabihf.tar.gz | ARMv7 MUSL Linux | checksum -

🔗 @malcat@infosec.exchange [#Kesakode](https://infosec.exchange/tags/Kesakode) DB has been updated to mastodon

#Kesakode DB has been updated to 1.0.36 !

9 new malware families

70 extended malware signatures

37 new malicious samples in database

11440 new library objects seen

120k new clean programs whitelisted

17M new unique functions

* 3M new unique strings -

🔗 The Pragmatic Engineer Cursor makes developers less effective? rss

Hi, this is Gergely with a bonus, free issue of the Pragmatic Engineer Newsletter. In every issue, I cover Big Tech and startups through the lens of senior engineers and engineering leaders. Today, we cover one out of four topics from last week 's The Pulse issue. Full subscribers received the below article seven days ago. To get articles like this in your inbox, every week,subscribe here .

Many subscribers expense this newsletter to their learning and development budget. If you have such a budget, here 's an email you could send to your manager .

An interesting study has been published by the nonprofit org, Model Evaluation and Threat Research (METR). They recruited 16 experienced developers who worked on large open source repositories, to fix 136 real issues, for pay of $150/hour. Some devs were assigned AI tools to use, and others were not. The study recorded devs' screens, and then examined and analyzed 146 hours of footage. The takeaway:

"Surprisingly, we find that when developers use AI tools, they take 19% longer than without. AI makes them slower. (...) This gap between perception and reality is striking: developers expected AI to speed them up by 24%, and even after experiencing the slowdown, they still believed AI had sped them up by 20%."

This result is very surprising! But what is going on? Looking closely at the research paper:

The research is about Cursor's impact on developer productivity. The AI tool of choice for pretty much all participants was Cursor, using Sonnet 3.5 or 3.7. A total of 44% of developers had never used Cursor before, and most others had used it for up to 50 hours.

Those using AI spent less time on coding to complete the work - but took more time, overall. They also spent less time on researching and testing. But they took longer on promoting, waiting on the AI, reviewing its output, and on "IDE overhead", than those not using AI. In the end, additional time spent with the AI wiped out the time it saved on coding, research, and testing, the study found.

It's worth pointing out that this finding applies to all AI tools, and not only to Cursor, which just happens to be the tool chosen for this study.

Using

AI meant less time spent coding, but the work took longer, overall.

Source:METR

Using

AI meant less time spent coding, but the work took longer, overall.

Source:METRDevelopers are over optimistic in their estimates about AI 's productivity impact - initially, at least.**** From the survey:

"Both experts and developers drastically overestimate the usefulness of AI on developer productivity, even after they have spent many hours using the tools. This underscores the importance of conducting field experiments with robust outcome measures, compared to relying solely on expert forecasts or developer surveys."

The one dev who had used Cursor for 50+ hours saw a lot of speedup! In the study, there was a single developer who had used Cursor for a total of more than 50 hours, previously. This dev saw a very impressive 38% increase in speed. Then again, a sample size of one is not very representative of a group of 16:

One

developer with 50+ hours of experience on Cursor completed work much faster.

Source:METR

One

developer with 50+ hours of experience on Cursor completed work much faster.

Source:METRSoftware engineer Simon Willison - whom I consider an unbiased expert on AI dev tools - interprets the survey like this:

"My intuition here is that this study mainly demonstrated that the learning curve of AI-assisted development is high enough that asking developers to bake it into their existing workflows reduces their performance while they climb that learning curve."

Indeed, he made a similar point on an episode of the Pragmatic Engineer podcast: "you have to put in so much effort to learn, to explore and experiment, and learn how to use it. And there's no guidance."

In research on AI tools by this publication, based on input from circa 200 software engineers, we found supporting evidence of that: those who hadn't used AI tools for longer than 6 months were more likely to have a negative perception of them. Very common feedback from engineers who didn't use AI tooling was that they'd tried it, but it didn't meet expectations, so they stopped.

The engineer who saw a 38% "speed-up" versus non-AI devs has an interesting take. That lone engineer with 50+ hours of Cursor experience is PhD student, Quentin Anthony. Here's what he says about the study, and how AI tools impact developer efficiency:

"1. AI speedup is very weakly correlated to anyone 's ability as a dev. All the devs in this study are very good. I think it has more to do with falling into failure modes, both in the LLM's ability and the human's workflow. I work with a ton of amazing pretraining devs, and I think people face many of the same problems.

We like to say that LLMs are tools, but treat them more like a magic bullet.

Literally any dev can attest to the satisfaction of finally debugging a thorny issue. LLMs are a big dopamine shortcut button that may one-shot your problem. Do you keep pressing the button that has a 1% chance of fixing everything? It's a lot more enjoyable than the grueling alternative, at least to me.

2. LLMs today have super spiky capability distributions. I think this has more to do with:what coding tasks we have lots of clean data forwhat benchmarks/evals LLM labs are using to measure success.

As an example, LLMs are all horrible at low-level systems code (GPU kernels, parallelism/communication, etc). This is because their code data is relatively rare, and evaluating model capabilities is hard (I discuss this in more detail here).

Since these tasks are a large part of what I do as a pretraining dev, I know what parts of my work are amenable to LLMs (writing tests, understanding unfamiliar code, etc) and which are not (writing kernels, understanding communication synchronization semantics, etc). I only use LLMs when I know they can reliably handle the task.

When determining whether some new task is amenable to an LLM, I try to aggressively time-box my time working with the LLM so that I don't go down a rabbit hole. Again, tearing yourself away from an LLM when "it's just so close!" is hard!

3. It 's super easy to get distracted in the downtime while LLMs are generating. The social media attention economy is brutal, and I think people spend 30 mins scrolling while "waiting" for their 30-second generation.

All I can say on this one is that we should know our own pitfalls and try to fill LLM-generation time productively:If the task requires high-focus, spend this time either working on a subtask, or thinking about follow-up questions. Even if the model one-shots my question, what else don't I understand?If the task requires low-focus, do another small task in the meantime (respond to email/slack, read or edit another paragraph, etc).

As always, small digital hygiene steps help with this (website blockers, phone on dnd, etc). Sorry to be a grampy, but it works for me :)"

Quentin concludes:

"LLMs are a tool, and we need to start learning its pitfalls and have some self-awareness. A big reason people enjoy Andrej Karpathy's talks is because he's a highly introspective LLM user, which he arrived at a bit early due to his involvement in pretraining some of them.

If we expect to use this new tool well, we need to understand its (and our own!) shortcomings and adapt to them."

I wonder if context switching could become the Achilles Heel of AI coding tools. As a dev, the most productive work I do is when I'm in "the zone", just locked into a problem with no distractions, and when my sole focus is work! I know how expensive it is to get back into the zone after you fall out of it.

But I cannot stay in the zone when using a time-saving AI coding tool; I need to do something else while code is being generated, so context switches are forced, and each one slows me down. It's a distraction.

What if the constraint of being "in the zone" when writing code is a feature, not a bug? And what if experienced devs not using AI tools outperform most others with AI because they consciously stay in "the zone" and focus more? Could those without AI tools have been "in the zone" and working at a higher performance level than devs forced into repeated context switches by their AI tools?

There's food for thought here about how time saved on coding doesn't automatically translate into higher productivity when building software.

This was one out of four topics from last week's The Pulse. The full issue also covers:

- Industry pulse. Why 1.1.1.1 went down for an hour, Microsoft cut jobs to buy more GPUs, Meta's incredible AI data center spend, and the "industry-wide problem" of fake job candidates from North Korea.

- Windsurf sale: a complicated story of OpenAI, Microsoft, Google, and Cognition. OpenAI wanted to buy Windsurf but couldn't because of Microsoft. Google then hired the founders and core team of Windsurf, and Cognition (the maker of Devin) bought the rest of the company. It's a weird story that could not happen outside of California - thanks to California having a ban on noncompetes.

- Beginning of the end for VC-subsidized tokens? Cursor angered devs by silently imposing limits on its "unlimited" tier. Us devs face the reality that LLM usage is getting more expensive - and VC funding will probably stop subsidizing the real cost of tokens.

This week's The Pulse issue - sent out to full subscribers - covers:

- Mystery solved about the cause of June 10th outages. Heroku went down for a day due to an update to the systemd process on Ubuntu Linux. Turns out that dozens of other companies including OpenAI, Zapier, and GitLab, were also hit by the same issue, with outages of up to 6 hours.

- Replit AI secretly deletes prod - oops! Cautionary tale of why vibe-coding apps are not yet production-ready, which makes it hard to foresee production-hardened apps being shipped with no software engineering expertise involved.

- Industry pulse. Fresh details about the Windsurf sale, Zed editor allows all AI functionality to be turned off, government agencies using Microsoft Sharepoint hacked, GitHub releases vibe coding tool, and more.

- Reflections on a year at OpenAI. Software engineer Calvin French-Owen summarized his impressions of OpenAI, sharing how the company runs on Slack and Azure, capacity planning challenges for OpenAI Codex launch, learnings from working on a large Python codebase, and more.

-

🔗 ryoppippi/ccusage v15.5.2 release

No significant changes

[View changes on

GitHub](https://github.com/ryoppippi/ccusage/compare/v15.5.1...v15.5.2)

-

🔗 Simon Willison Using GitHub Spark to reverse engineer GitHub Spark rss

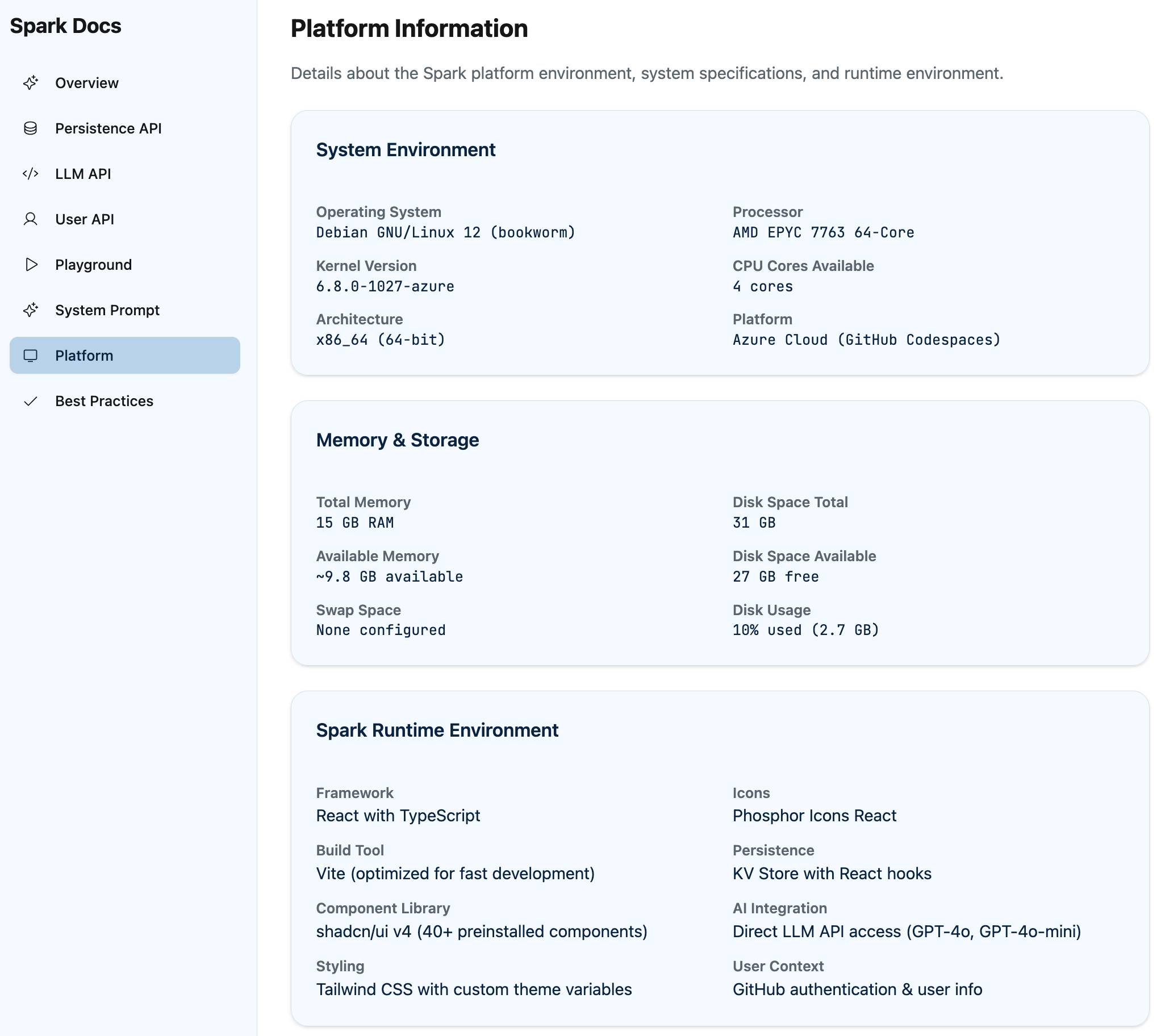

GitHub Spark was released in public preview yesterday. It's GitHub's implementation of the prompt-to-app pattern also seen in products like Claude Artifacts, Lovable, Vercel v0, Val Town Townie and Fly.io’s Phoenix New. In this post I reverse engineer Spark and explore its fascinating system prompt in detail.

I wrote about Spark back in October when they first revealed it at GitHub Universe.

GitHub describe it like this:

Build and ship full-stack intelligent apps using natural language with access to the full power of the GitHub platform—no setup, no configuration, and no headaches.

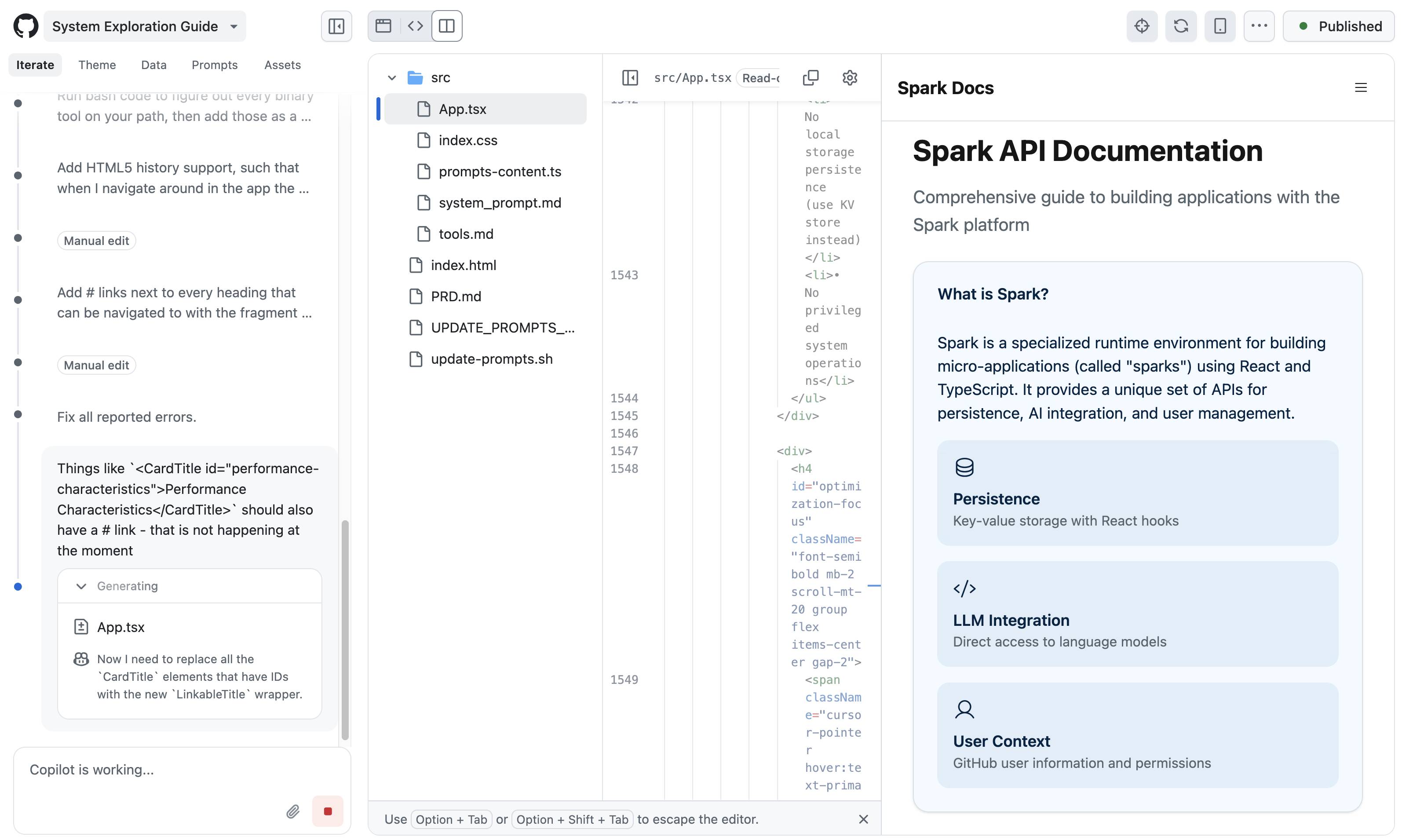

You give Spark a prompt, it builds you a full working web app. You can then iterate on it with follow-up prompts, take over and edit the app yourself (optionally using GitHub Codespaces), save the results to a GitHub repository, deploy it to Spark's own hosting platform or deploy it somewhere else.

Here's a screenshot of the Spark interface mid-edit. That side-panel is the app I'm building, not the docs - more on that in a moment.

- Spark capabilities

- Reverse engineering Spark with Spark

- That system prompt in detail

- What can we learn from all of this?

- Spark features I'd love to see next

Spark capabilities

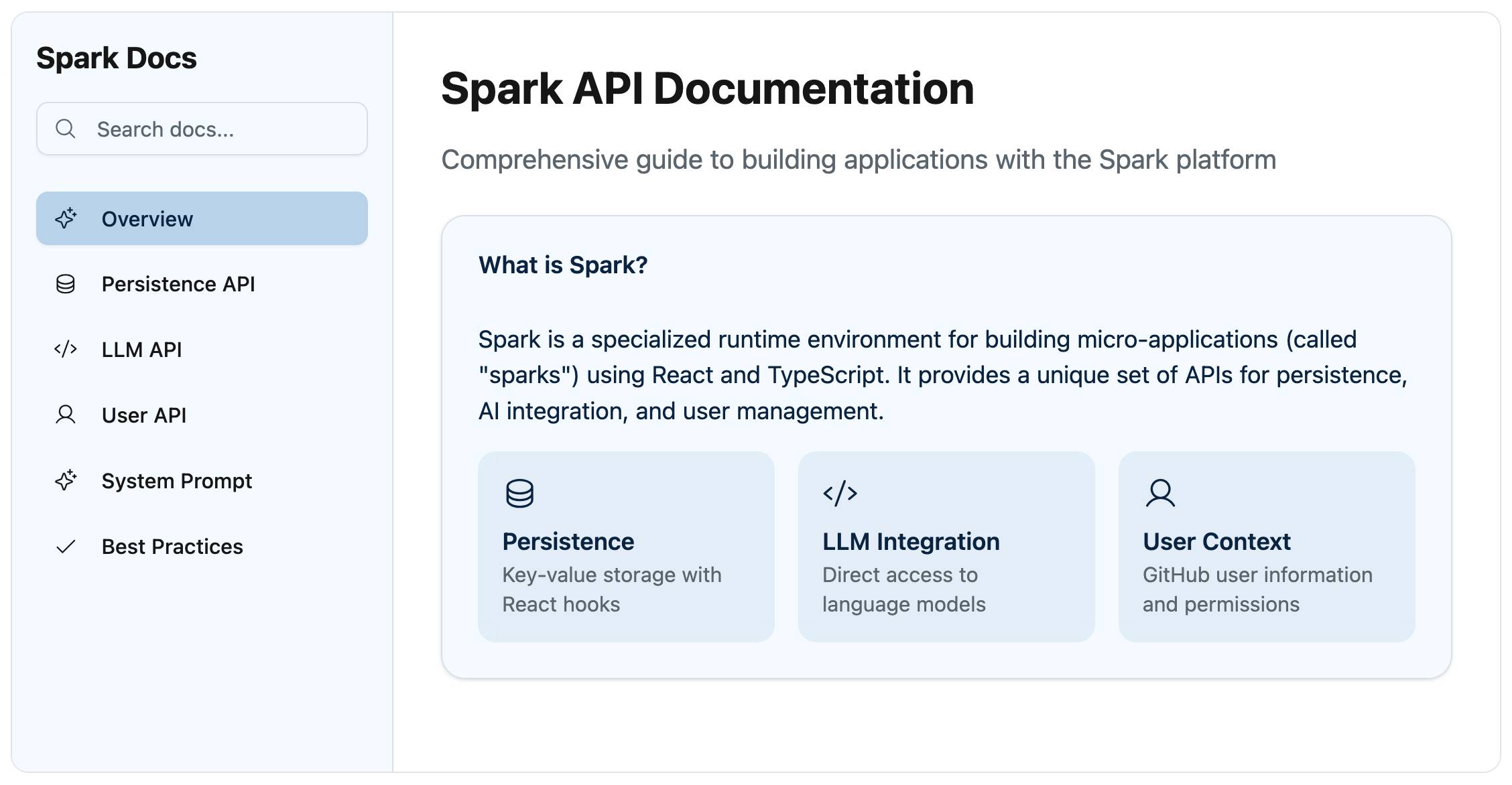

Sparks apps are client-side apps built with React - similar to Claude Artifacts - but they have additional capabilities that make them much more interesting:

- They are authenticated: users must have a GitHub account to access them, and the user's GitHub identity is then made available to the app.

- They can store data! GitHub provides a persistent server-side key/value storage API.

- They can run prompts. This ability isn't unique - Anthropic added that to Claude Artifacts last month. It looks like Spark apps run prompts against an allowance for that signed-in user, which is neat as it means the app author doesn't need to foot the bill for LLM usage.

A word of warning about the key/value store: it can be read, updated and deleted by anyone with access to the app. If you're going to allow all GitHub users access this means anyone could delete or modify any of your app's stored data.

I built a few experimental apps, and then decided I to go meta: I built a Spark app that provides the missing documentation for how the Spark system works under the hood.

Reverse engineering Spark with Spark

Any system like Spark is inevitably powered by a sophisticated invisible system prompt telling it how to behave. These prompts double as the missing manual for these tools - I find it much easier to use the tools in a sophisticated way if I've seen how they work under the hood.

Could I use Spark itself to turn that system prompt into user-facing documentation?

Here's the start of my sequence of prompts:

-

An app showing full details of the system prompt, in particular the APIs that Spark apps can use so I can write an article about how to use you[result]

That got me off to a pretty great start!

You can explore the final result at github-spark-docs.simonwillison.net.

Spark converted its invisible system prompt into a very attractive documentation site, with separate pages for different capabilities of the platform derived from that prompt.

I read through what it had so far, which taught me how the persistence, LLM prompting and user profile APIs worked at a JavaScript level.

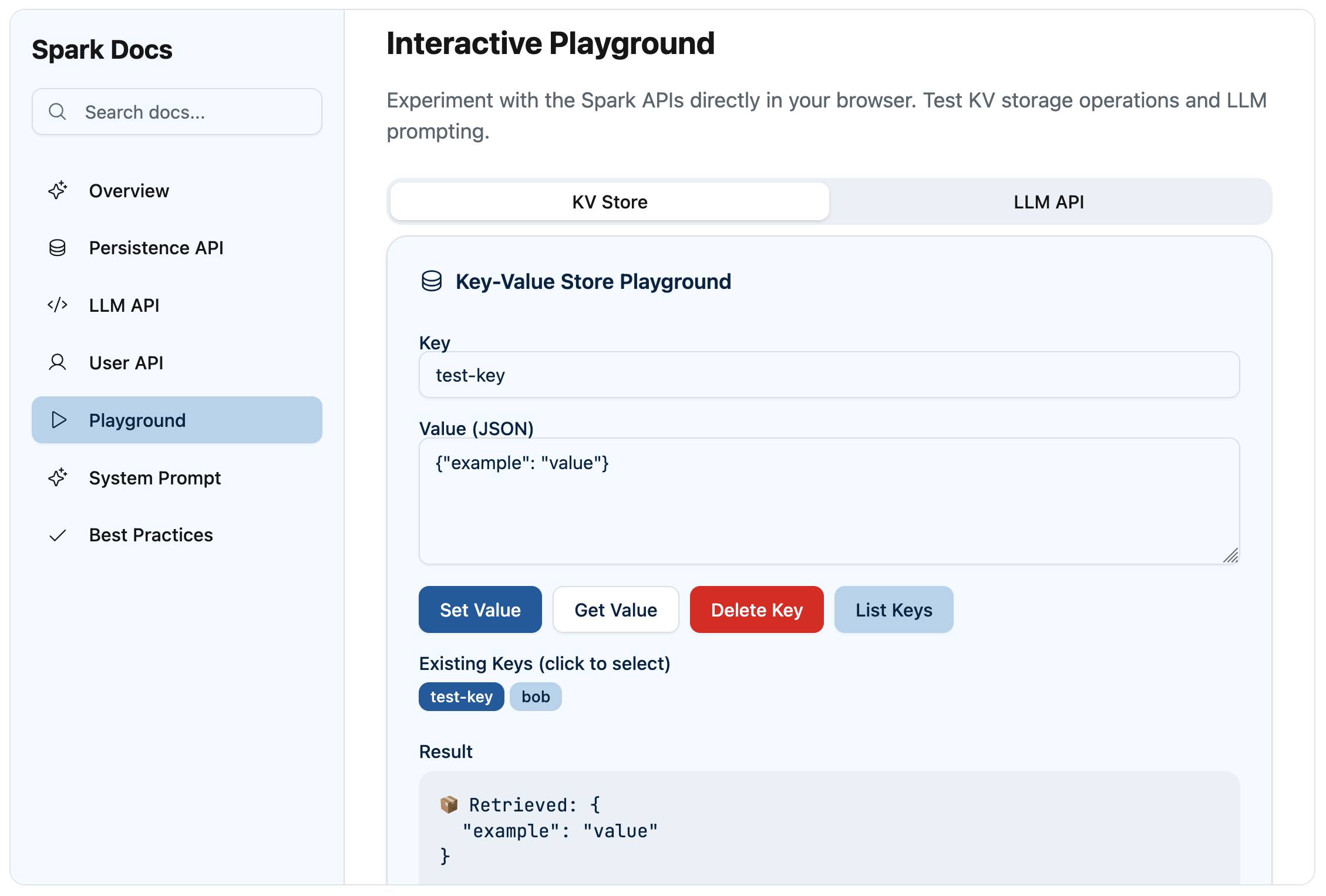

Since these could be used for interactive features, why not add a Playground for trying them out?

-

Add a Playground interface which allows the user to directly interactively experiment with the KV store and the LLM prompting mechanism[result]

This built me a neat interactive playground:

The LLM section of that playground showed me that currently only two models are supported: GPT-4o and GPT-4o mini. Hopefully they'll add GPT-4.1 soon. Prompts are executed through Azure OpenAI.

It was missing the user API, so I asked it to add that too:

-

Add the spark.user() feature to the playground[result]

Having a summarized version of the system prompt as a multi-page website was neat, but I wanted to see the raw text as well. My next prompts were:

-

Create a system_prompt.md markdown file containing the exact text of the system prompt, including the section that describes any tools. Then add a section at the bottom of the existing System Prompt page that loads that via fetch() and displays it as pre wrapped text -

Write a new file called tools.md which is just the system prompt from the heading ## Tools Available - but output < instead of < and > instead of >No need to click "load system prompt" - always load itLoad the tools.md as a tools prompt below that (remove that bit from the system_prompt.md)

The bit about

<and>was because it looked to me like Spark got confused when trying to output the raw function descriptions to a file - it terminated when it encountered one of those angle brackets.Around about this point I used the menu item "Create repository" to start a GitHub repository. I was delighted to see that each prompt so far resulted in a separate commit that included the prompt text, and future edits were then automatically pushed to my repository.

I made that repo public so you can see the full commit history here.

... to cut a long story short, I kept on tweaking it for quite a while. I also extracted full descriptions of the available tools:

-

str_replace_editor for editing files, which has sub-commands

view,create,str_replace,insertandundo_edit. I recognize these from the Claude Text editor tool, which is one piece of evidence that makes me suspect Claude is the underlying model here. -

npm for running npm commands (

install,uninstall,update,list,view,search) in the project root. - bash for running other commands in a shell.

- create_suggestions is a Spark-specific tool - calling that with three suggestions for next steps (e.g. "Add message search and filtering") causes them to be displayed to the user as buttons for them to click.

Full details are in the tools.md file that Spark created for me in my repository.

The bash and npm tools clued me in to the fact that Spark has access to some kind of server-side container environment. I ran a few more prompts to add documentation describing that environment:

-

Use your bash tool to figure out what linux you are running and how much memory and disk space you have(this ran but provided no output, so I added:) Add that information to a new page called PlatformRun bash code to figure out every binary tool on your path, then add those as a sorted comma separated list to the Platform page

This gave me a ton of interesting information! Unfortunately Spark doesn't show the commands it ran or their output, so I have no way of confirming if this is accurate or hallucinated. My hunch is that it's accurate enough to be useful, but I can't make any promises.

Spark apps can be made visible to any GitHub user - I set that toggle on mine and published it to system-exploration-g--simonw.github.app, so if you have a GitHub account you should be able to visit it there.

I wanted an unathenticated version to link to though, so I fired up Claude Code on my laptop and had it figure out the build process. It was almost as simple as:

npm install npm run build... except that didn't quite work, because Spark apps use a private

@github/sparklibrary for their Spark-specific APIs (persistence, LLM prompting, user identity) - and that can't be installed and built outside of their platform.Thankfully Claude Code (aka Claude Honey Badger) won't give up, and it hacked around with the code until it managed to get it to build.

That's the version I've deployed to github-spark-docs.simonwillison.net using GitHub Pages and a custom subdomain so I didn't have to mess around getting the React app to serve from a non-root location.

The default app was a classic SPA with no ability to link to anything inside of it. That wouldn't do, so I ran a few more prompts:

Add HTML5 history support, such that when I navigate around in the app the URL bar updates with #fragment things and when I load the page for the first time that fragment is read and used to jump to that page in the app. Pages with headers should allow for navigation within that page - e.g. the Available Tools heading on the System Prompt page should have a fragment of #system-prompt--available-tools and loading the page with that fragment should open that page and jump down to that heading. Make sure back/forward work tooAdd # links next to every heading that can be navigated to with the fragment hash mechanismThings like <CardTitle id="performance-characteristics">Performance Characteristics</CardTitle> should also have a # link - that is not happening at the moment

... and that did the job! Now I can link to interesting sections of the documentation. Some examples:

- Docs on the persistence API

- Docs on LLM prompting

- The full system prompt, also available in the repo

- That Platform overiew, including a complete list of binaries on the Bash path. There are 782 of these! Highlights include

rgandjqandgh. - A Best Practices guide that's effectively a summary of some of the tips from the longer form system prompt.

The interactive playground is visible on my public site but doesn't work, because it can't call the custom Spark endpoints. You can try the authenticated playground for that instead.

That system prompt in detail

All of this and we haven't actually dug into the system prompt itself yet.

I've read a lot of system prompts, and this one is absolutely top tier. I learned a whole bunch about web design and development myself just from reading it!

Let's look at some highlights:

You are a web coding playground generating runnable code micro-apps ("sparks"). This guide helps you produce experiences that are not only functional but aesthetically refined and emotionally resonant.

Starting out strong with "aesthetically refined and emotionally resonant"! Everything I've seen Spark produce so far has had very good default design taste.

Use the available search tools to understand the codebase and the user's query. You are encouraged to use the search tools extensively both in parallel and sequentially, especially when you are starting or have no context of a project.

This instruction confused me a little because as far as I can tell Spark doesn't have any search tools. I think it must be using

rgandgrepand the like for this, but since it doesn't reveal what commands it runs I can't tell for sure.It's interesting that Spark is not a chat environment - at no point is a response displayed directly to the user in a chat interface, though notes about what's going on are shown temporarily while the edits are being made. The system prompt describes that like this:

You are an AI assistant working in a specialized development environment. Your responses are streamed directly to the UI and should be concise, contextual, and focused. This is not a chat environment, and the interactions are not a standard "User makes request, assistant responds" format. The user is making requests to create, modify, fix, etc a codebase - not chat.

All good system prompts include examples, and this one is no exception:

✅ GOOD:

- "Found the issue! Your authentication function is missing error handling."

- "Looking through App.tsx to identify component structure."

- "Adding state management for your form now."

- "Planning implementation - will create Header, MainContent, and Footer components in sequence."

❌ AVOID:

- "I'll check your code and see what's happening."

- "Let me think about how to approach this problem. There are several ways we could implement this feature..."

- "I'm happy to help you with your React component! First, I'll explain how hooks work..."

The next "Design Philosophy" section of the prompt helps explain why the apps created by Spark look so good and work so well.

I won't quote the whole thing, but the sections include "Foundational Principles", "Typographic Excellence", "Color Theory Application" and "Spatial Awareness". These honestly feel like a crash-course in design theory!

OK, I'll quote the full typography section just to show how much thought went into these:

Typographic Excellence

- Purposeful Typography: Typography should be treated as a core design element, not an afterthought. Every typeface choice should serve the app's purpose and personality.

- Typographic Hierarchy: Construct clear visual distinction between different levels of information. Headlines, subheadings, body text, and captions should each have a distinct but harmonious appearance that guides users through content.

- Limited Font Selection: Choose no more than 2-3 typefaces for the entire application. Consider San Francisco, Helvetica Neue, or similarly clean sans-serif fonts that emphasize legibility.

- Type Scale Harmony: Establish a mathematical relationship between text sizes (like the golden ratio or major third). This forms visual rhythm and cohesion across the interface.

- Breathing Room: Allow generous spacing around text elements. Line height should typically be 1.5x font size for body text, with paragraph spacing that forms clear visual separation without disconnection.

At this point we're not even a third of the way through the whole prompt. It's almost 5,000 words long!

Check out this later section on finishing touches:

Finishing Touches

- Micro-Interactions: Add small, delightful details that reward attention and form emotional connection. These should be discovered naturally rather than announcing themselves.

- Fit and Finish: Obsess over pixel-perfect execution. Alignment, spacing, and proportions should be mathematically precise and visually harmonious.

- Content-Focused Design: The interface should ultimately serve the content. When content is present, the UI should recede; when guidance is needed, the UI should emerge.

- Consistency with Surprise: Establish consistent patterns that build user confidence, but introduce occasional moments of delight that form memorable experiences.

The remainder of the prompt mainly describes the recommended approach for writing React apps in the Spark style. Some summarized notes:

- Spark uses Vite, with a

src/directory for the code. - The default Spark template (available in github/spark-template on GitHub) starts with an

index.htmlandsrc/App.tsxandsrc/main.tsxandsrc/index.cssand a few other default files ready to be expanded by Spark. - It also has a whole host of neatly designed default components in src/components/ui with names like

accordion.tsxandbutton.tsxandcalendar.tsx- Spark is told "directory where all shadcn v4 components are preinstalled for you. You should view this directory and/or the components in it before using shadcn components." - A later instruction says "Strongly prefer shadcn components (latest version v4, pre-installed in

@/components/ui). Import individually (e.g.,import { Button } from "@/components/ui/button";). Compose them as needed. Use over plain HTML elements (e.g.,<Button>over<button>). Avoid creating custom components with names that clash with shadcn." - There's a handy type definition describing the default

spark.API namespace:declare global { interface Window { spark: { llmPrompt: (strings: string[], ...values: any[]) => string llm: (prompt: string, modelName?: string, jsonMode?: boolean) => Promise<string> user: () => Promise<UserInfo> kv: { keys: () => Promise<string[]> get: <T>(key: string) => Promise<T | undefined> set: <T>(key: string, value: T) => Promise<void> delete: (key: string) => Promise<void> } } } }

- The section on theming leans deep into Tailwind CSS and the tw-animate-css package, including a detailed example.

- Spark is encouraged to start by creating a PRD - a Product Requirements Document - in

src/prd.md. Here's the detailed process section on that, and here's the PRD for my documentation app (calledPRD.mdand notsrc/prd.md, I'm not sure why.)

The system prompt ends with this section on "finishing up":

Finishing Up

- After creating files, use the

create_suggestionstool to generate follow up suggestions for the user. These will be presented as-is and used for follow up requests to help the user improve the project. You must do this step. - When finished, only return

DONEas your final response. Do not summarize what you did, how you did it, etc, it will never be read by the user. Simply returnDONE

Notably absent from the system prompt: instructions saying not to share details of the system prompt itself!

I'm glad they didn't try to suppress details of the system prompt itself. Like I said earlier, this stuff is the missing manual: my ability to use Spark is greatly enhanced by having read through the prompt in detail.

What can we learn from all of this?

This is an extremely well designed and implemented entrant into an increasingly crowded space.

GitHub previewed it in October and it's now in public preview nine months later, which I think is a great illustration of how much engineering effort is needed to get this class of app from initial demo to production-ready.

Spark's quality really impressed me. That 5,000 word system prompt goes a long way to explaining why the system works so well. The harness around it - with a built-in editor, Codespaces and GitHub integration, deployment included and custom backend API services - demonstrates how much engineering work is needed outside of a system prompt to get something like this working to its full potential.

When the Vercel v0 system prompt leaked Vercel's CTO Malte Ubl said:

When @v0 first came out we were paranoid about protecting the prompt with all kinds of pre and post processing complexity.

We completely pivoted to let it rip. A prompt without the evals, models, and especially UX is like getting a broken ASML machine without a manual

I would love to see the evals the Spark team used to help iterate on their epic prompt!

Spark features I'd love to see next

I'd love to be able to make my Spark apps available to unauthenticated users. I had to figure out how to build and deploy the app separately just so I could link to it from this post.

Spark's current deployment system provides two options: just the app owner or anyone with a GitHub account. The UI says that access to "All members of a selected organization" is coming soon.

Building and deploying separately had added friction due to the proprietary

@github/sparkpackage. I'd love an open source version of this that throws errors about the APIs not being available - that would make it much easier to build the app independently of that library.My biggest feature request concerns that key/value API. The current one is effectively a global read-write database available to any user who has been granted access to the app, which makes it unsafe to use with the "All GitHub users" option if you care about your data being arbitrarily modified or deleted.

I'd like to see a separate key/value API called something like this:

spark: { userkv: { keys: () => Promise<string[]> get: <T>(key: string) => Promise<T | undefined> set: <T>(key: string, value: T) => Promise<void> delete: (key: string) => Promise<void> } }

This is the same design as the existing

kvnamespace but data stored here would be keyed against the authenticated user, and would not be visible to anyone else. That's all I would need to start building applications that are secure for individual users.I'd also love to see deeper integration with the GitHub API. I tried building an app to draw graphs of my open issues but it turned there wasn't a mechanism for making authenticated GitHub API calls, even though my identity was known to the app.

Maybe a

spark.user.githubToken()API method for retrieving a token for use with the API, similar to howGITHUB_TOKENworks in GitHub Actions, would be a useful addition here.Pony requests aside, Spark has really impressed me. I'm looking forward to using it to build all sorts of fun things in the future.

You are only seeing the long-form articles from my blog. Subscribe to /atom/everything/ to get all of my posts, or take a look at my other subscription options.

-

🔗 News Minimalist 🐢 UN court declares healthy environment a human right + 7 more stories rss

In the last 2 days ChatGPT read 57170 top news stories. After removing previously covered events, there are 8 articles with a significance score over 5.9.

[6.4] Healthy environment is a human right, top UN court rules in landmark climate case —irishtimes.com(+74)

The International Court of Justice ruled that a clean, healthy environment is a fundamental human right, requiring states to address harm from their carbon emissions.

This landmark decision clarifies states' obligations to combat climate change, emphasizing intergenerational equity and holding major emitters accountable for inaction, potentially leading to reparations.

The non-binding opinion is seen as a turning point in international climate law, potentially enabling future legal actions.

[6.3] Gene editing stops malaria transmission in Asian mosquitoes —today.ucsd.edu(+3)

Scientists have engineered mosquitoes to halt malaria transmission by altering a single gene, a breakthrough that could combat the deadly disease.

This CRISPR-based system modifies a protein in mosquitoes, preventing malaria parasites from reaching their salivary glands and thus stopping transmission to humans. The genetic change is designed to spread through mosquito populations.

Researchers tested the system in Anopheles stephensi mosquitoes, a primary malaria vector in Asia, successfully blocking parasite infection and spread.

Highly covered news with significance over 5.5

[6.0] US Olympic Committee bars transgender women from women's sports — ctvnews.ca (+53)

[6.1] US and Japan agree on a trade deal — cnbc.com (+327)

[5.8] More than 100 humanitarian groups warn of mass starvation in Gaza — bbc.com (+191)

[6.1] Brazil joins ICJ genocide case against Israel — reuters.com [$] (+5)

[5.6] UK and India sign historic trade deal — theguardian.com (+107)

[5.8] Trump unveiled AI Action Plan, cutting regulations — apnews.com (+88)

Thanks for reading!

— Vadim

You can customize this newsletter with premium.

-

🔗 r/reverseengineering New Advanced Stealer (SHUYAL) Targets Credentials Across 19 Popular Browsers rss

submitted by /u/CyberMasterV

[link] [comments] -

🔗 r/wiesbaden MTG Wiesbaden ~ Die Gruppe für Magic Spieler und die, die es werden wollen! rss

Suchst du nach einer Magic-Gemeinschaft in Wiesbaden? Dann bist du hier genau richtig!

Die Whatsapp-Gruppe wurde im Februar 2022 von Mangamafia gegründet, um Magic The Gathering Spieler aus Wiesbaden und Umgebung zusammenzubringen.

Hier ist, was du in unserer Gruppe finden kannst:

- Eine freundliche und einladende Community, in der wir uns über Karten, Decks oder die neueste Standard Meta austauschen. Die generelle Entwicklung von Magic wird ebenfalls diskutiert.

- Jeden Samstag 16 Uhr MTG Standard-Showdown in Darmstadt. Wir fahren da entweder mit dem Zug oder bilden Fahrgemeinschaften.

- Jeden Mittwoch gibt es im Glitchless in Mainz 17 Uhr abwechselnd Draft und Standard Showdown.

- Treffen zum Spielen, Tauschen und Spaß haben.

- Unterstützung für Anfänger: Wir helfen gerne dabei, die Grundlagen des Spiels zu erlernen und versorgen euch auch mit euren ersten Karten. Gelegentlich gibt es Learn to Play Events wo das Spiel beigebracht oder beim Deckbau unterstützt wird. Das nächste ist am 16. August im Glitchless in Mainz.

- Gelegentlich organisieren wir privat kleine Turniere oder Drafts, um unsere Fähigkeiten zu testen.

- Wir spielen hauptsächlich Standard, aber es gibt auch Spieler, die sich für schwierigere Formate begeistern.

- Private Events interessieren uns, obwohl die Organisation manchmal eine Herausforderung darstellt. 1-2 Events sind für dieses Jahr auf jeden noch geplant.

Mangamafia strebte danach, mehr Magic-Events anzubieten, hat sich in Wiesbaden aber aus dem Spielbetrieb zurückgezogen. Wir haben schon viele Orte zum ausweichen etabliert. Allen voran JK-Entertainment in Darmstadt 16 Uhr jeden Samstag und der Glitchless jeden Mittwoch um 16 Uhr.

Also im Endeffekt ist das eine Wiesbaden Gruppe, die von Mangamafia ins Leben gerufen wurde, aber nicht mehr so viel mit Mangamafia zu tun hat. Tatsächlich sind die Store Championships der verschiedenen TCG-Läden unser Hauptaugenmerk geworden. Durch die neuen Sets alle 2 Monate sind diese sehr regelmäßig geworden.

Hier ist der Link zu Mangamafia: https://manga-mafia.de/store-wiesbaden

Hier ist der Link zu JK-Entertainment: https://jk-entertainment.de/

Hier ist der Link zum Glitchless: https://glitchless.de/

MTG Subreddit: r/spikes

Der Link für die Whatsappgruppe wird hier nur auf Anfrage zur Verfügung gestellt, da es Probleme mit Spam gab. Ihr könnt mir auch eine Nachricht schicken.

submitted by /u/aqua995

[link] [comments] -

🔗 @trailofbits@infosec.exchange [https://mailchi.mp/trailofbits/trail-of-bits-tribune- mastodon

-

🔗 @trailofbits@infosec.exchange New Trail of Bits Tribune: Our AIxCC finals submission, how we exposed mastodon

New Trail of Bits Tribune: Our AIxCC finals submission, how we exposed critical flaws in Go's built-in parsers that can enable authentication bypass and data exfiltration from production systems, and 14 new security reviews.

Read it here: https://mailchi.mp/trailofbits/trail-of-bits-tribune- july-2025 -

🔗 @HexRaysSA@infosec.exchange IDA 9.2 (coming soon... ) adds full support for the TriCore TC1.8 mastodon

IDA 9.2 (coming soon... ) adds full support for the TriCore TC1.8 architecture, including over 50 new instructions and updated chipset definitions. Ideal for automotive and railway firmware analysis.

-

🔗 r/reverseengineering Development Journey on Game Decompilation Using AI rss