- Small Language Models are the Future of Agentic AI

- Hex-Rays Microcode API vs. Obfuscating Compiler – Hex Rays

- VibeTunnel: Turn Any Browser into Your Mac's Terminal | Peter Steinberger

- Fil-C Manifesto

- 30 Days of Malware Analysis - What trends can be observed? – STRÖMBLAD

- July 05, 2025

-

🔗 r/reverseengineering Psi-Ops: The Mindgate Conspiracy Mod Tools (PC) - .w32 format [Visual Studio] rss

I'm looking for additional help with the creation of mod tools for this freeware game. From communicating with Watto's team for the Game Extractor as well as ResHax, the .w32 format appears to be less of a game archive and more of a large file with a bunch of pointers, and it's apparently quite a challenge to navigate. One of ResHax's regulars is taking his best shot at it, but he'd appreciate someone with Visual Studio experience to aid in its development. The entire game, as well as all of his work, is easily accessible via links in the forum.

I'm also game to pay for any necessary assistance with anything pertaining to the .w32 format or the mod tools themselves (via Paypal or preferred payment gateway)!

If you're interested feel free to reply here, DM me, or join in on the ResHax thread.

submitted by /u/Gagnetar

[link] [comments] -

🔗 r/wiesbaden ESWE charging card - why does it exist? rss

Charging with a charging card at ESWE (the local electricity provider for Wiesbaden and the surrounding area) costs more than ad-hoc charging. And the charging card also comes with a monthly fee?

https://www.eswe-versorgung.de/mobilitaet/e-mobilitaet/oeffentliches-laden/

Ad-hoc charging = 46/56 cents/kWh (AC/DC)

https://www.eswe-versorgung.de/mobilitaet/e-mobilitaet/eswe-lade-karte.html

Charging card = 49/59 cents/kWh (AC/DC) + €6.49 per month

See also Chargeprice:

https://www.chargeprice.app/?poi_id=c648d97b-f4e1-4b25-8e17-cc13dec39302&poi_source=chargeprice

Is there a reason for this?

submitted by /u/Jazzlike-Specific-44

[link] [comments] -

🔗 The Pragmatic Engineer Software engineering with LLMs in 2025: temperature check rss

How are devs using AI tools at Big Tech and startups, and what do they actually think of them? This was the topic of my annual conference talk, in June 2025, in London, at LDX3 by LeadDev.

At LDX3 by LeadDev

To research, I talked with devs at:

- AI dev tools startups: Anthropic, Cursor, Windsurf

- Big Tech: Google and Amazon

- AI startups: incident.io and a biotech AI startup

- Vendor-independent software engineers: Kent Beck, Birgitta Boeckeler, Simon Willison, Armin Ronacher and Peter Steinberger

The recording of the talk is out. If you were not at the conference but have 25 minutes, you can watch it here.

And if you don't: I wrote an article that summarizes the talk and adds more details: read it here.

My takeaway is that these tools are spreading; they will probably change how we engineers build software, but we still don't know exactly how. Now is the time to experiment, with a mindset that is both open and critical!

-

🔗 r/wiesbaden On July 11 there will be good music, cool drinks and summer vibes at the Vogeltränke (in front of the Kreativfabrik) for everyone who is into house, disco and techno! The whole thing is free and starts around 19:00! rss

submitted by /u/Dub_Tee_

[link] [comments] -

🔗 jesseduffield/lazygit v0.53.0 release

This is the first lazygit release after Jesse passed the maintainership to me (@stefanhaller), and I'm excited (and a little bit nervous 😄) about it.

No big new features this time, but lots of smaller quality-of-life improvements. The one that makes the biggest difference for me is an improved hunk selection mode in the staging view (in case you didn't know, you can switch from the normal line selection mode to hunk mode by pressing `a`). This now works a bit more fine-grained, by selecting groups of added or deleted lines rather than entire hunks, which often consist of several such groups. With this change I find that I prefer hunk mode over line mode in most cases, so I added a user config to switch to hunk mode automatically upon entering the staging view; it is off by default, but I encourage you to enable it (`gui.useHunkModeInStagingView`) to see if you like it as much as I do. Feedback about this is welcome; please comment on the PR if you have any.

The detailed list of all changes follows:

What's Changed

Enhancements 🔥

- Add option to disable warning when amending last commit by @johnhamlin in #4640

- Add bold style for border by @aidancz in #4644

- Add credential prompts for PKCS11-based SSH keys by @Jadeiin in #4646

- Show annotation information for selected tag by @stefanhaller in #4663

- Show stash name for selected stash by @stefanhaller in #4673

- Auto-stash modified files when cherry-picking or reverting commits by @stefanhaller in #4683

- Move to next stageable line when adding a line to a custom patch by @stefanhaller in #4675

- Improve hunk selection mode in staging view by @stefanhaller in #4684

- Add user config to use hunk mode by default when entering staging view by @stefanhaller in #4685

Fixes 🔧

- Fix stash operations when branch named 'stash' exists by @ChrisMcD1 in #4641

- Fix moving a custom patch from the very first commit of the history to a later commit by @stefanhaller in #4631

- Fix DEFAULT_REMOTE_ICON character code by @bedlamzd in #4653

- Show GPG error before entering commit editor when rewording non-latest commits by @m04f in #4660

- Fix branch head icon appearing at head commit when a remote or tag exists with the same name as the current branch by @stefanhaller in #4669

- Fix applying custom patches to a dirty working tree by @stefanhaller in #4674

- Collapse selection after deleting a range of branches or stashes by @nileric in #4661

Maintenance ⚙️

- Instantiate mutexes by value by @stefanhaller in #4632

- Bump github.com/cloudflare/circl from 1.6.0 to 1.6.1 by @dependabot in #4633

- Update linter by @stefanhaller in #4671

- Some code cleanups to the "discard file changes from commit" feature by @stefanhaller in #4679

- Change Refresh to not return an error by @stefanhaller in #4680

Docs 📖

- Fix formatting of a keyboard shortcut in the README.md by @DanOpcode in #4678

I18n 🌎

- Update translations from Crowdin by @stefanhaller in #4686

New Contributors

- @johnhamlin made their first contribution in #4640

- @aidancz made their first contribution in #4644

- @bedlamzd made their first contribution in #4653

- @Jadeiin made their first contribution in #4646

- @m04f made their first contribution in #4660

- @DanOpcode made their first contribution in #4678

- @nileric made their first contribution in #4661

Full Changelog: v0.52.0...v0.53.0

-

🔗 Arne Bahlo Reclaiming my attention rss

“Like fingers pointing to the moon, other diverse disciplines from anthropology to education, behavioral economics to family counseling, similarly suggest that the skillful management of attention is the sine qua non of the good life and the key to improving virtually every aspect of your experience.” — Winifred Gallagher

Our attention is being stolen.

We’re slowly losing the ability to concentrate, not only because of TikTok, but also because we constantly have access to easy consumption. It’s digital fast food.

When I started to notice this in myself, I started with the naïve approach and deleted all time sink[1] apps like YouTube or Instagram. This worked for a day or two until I either got bored or found another reason to re-install.

Then I moved to Apple Screen Time. This works for a bit, but that “15 minutes more” button starts to become muscle memory very quickly. Even apps like one sec got deleted because they’re annoying (that’s the idea, I know).

In January 2024 I went 7 days with only my Apple Watch, leaving my iPhone at my desk at all times. It was doable, but very impractical.

None of this worked

In early 2025 I sold my Apple Watch and bought a Casio DW-5600BB-1. This had a large impact as I would no longer get buzzed on my wrist for every notification[2].

Then I deleted my Instagram account and the YouTube, Mastodon and Bluesky apps. Yes, a lot of my friends are on Instagram—but I mostly watched Reels anyway! I do miss Mastodon. I now use YouTube in Safari with shorts blocked. This creates enough friction for me to watch videos intentionally (most of the time). It also lets me use cool extensions like SponsorBlock and DeArrow.

And when Apple Intelligence (if you can call it that) got to Europe, I turned on the new Reduce Interruptions focus and never turned it off. This works great because it randomly lets things through, but blocks most of it.

I tried a dumb phone[3], but this was way too much friction: I need a usable phone for renting a bike, getting my parcels, etc.

In March I bought a Bullet Journal and started tracking my tasks there instead of my phone. I also started journalling and tracking my habits and I love the analog lifestyle—even my running plan is analog!

The combination of all of this is working out pretty well so far: My phone is mostly boring. It doesn’t have any exciting apps. It doesn’t spark joy. Because of this, I’m spending my time a lot more intentionally.

That being said, sometimes I spend a little too much time on YouTube (at least that’s longform content) and I should probably delete the Slack app to not check the work chat when I’m not at my desk.

We deserve more humane tech

I’m happy to see the first smartphone vendors start to add physical switches to their phones to disable connectivity or limit apps[4], and maybe I’ll switch to one of them one day.

This is not a political post, but if it was, it would talk about the obscene power of big tech and the necessity to regulate and break up. It would appeal to you to rethink your investment in these services, services that are actively spying on you, services that exploit your mental health for the sake of raising shareholder value.

Now go, touch some grass[5]. 🌱

1. Knapp, J. & Zeratsky, J. (2018). Make Time: How to focus on what matters every day.

2. Yes, you can turn all notifications off. I didn’t.

3. Apparently there’s an app for that.

-

-

- July 04, 2025

-

🔗 ryoppippi/ccusage v15.3.0 release

🎯 Featured Sponsor

Thank you to our sponsor @GregBaugues (/month) for supporting ccusage development!

Check out these 47 Claude Code ProTips from Greg Baugues.

95k+ views and growing! 🚀

🚀 Features

- Add --color and --no-color CLI flags for output control (doc only) - by @gfx and Claude in #201 (dd341)

- Add Claude usage limit reset time tracking and display - by @salala01 in #219 (b7171)

- Add Featured Sponsor section for Greg Baugues Claude Code ProTips - by @ryoppippi in #252 (56f08)

- Improve Featured Sponsor section layout and sizing - by @ryoppippi (9c49f)

🐞 Bug Fixes

- Change OGP image from SVG to PNG for better social media compatibility - by @ryoppippi (7d6f1)

- Handle file read errors gracefully in live monitor - by @warpdev in #202 (e3a3d)

- Include cache tokens in live usage token count calculations - by @tifoji in #193 (3190d)

- live-monitor: Correct Result.try usage for async file operations - by @ryoppippi (416b0)

[View changes on GitHub](https://github.com/ryoppippi/ccusage/compare/v15.2.0...v15.3.0)

-

🔗 News Minimalist Japan to begin world-first deep-sea mining trial + 9 more stories rss

In the last 2 days ChatGPT read 56799 top news stories. After removing previously covered events, there are 10 articles with a significance score over 5.9.

[5.9] Japan will extract deep-sea minerals in world-first trial —dawn.com(+2)

Japan will begin a "world first" deep-sea extraction of rare earth minerals in January, aiming to secure resources vital for technology.

The test will involve the Chikyu drilling vessel retrieving sediment from 5,500 meters deep near Minami Torishima. The mission, lasting about three weeks, seeks to test equipment, with an estimated 35 tonnes of mud extracted, potentially containing rare earth minerals.

This move follows Japan's pledge to collaborate with the US, India, and Australia on mineral supply. Deep-sea mining faces environmental concerns and geopolitical tensions, especially with China's export controls on rare earths.

[6.0] Gene therapy reverses genetic deafness in weeks —independent.co.uk(+3)

A new gene therapy injection has shown the potential to reverse hearing loss in weeks, according to a recent study.

The clinical trial, published in Nature Medicine, involved injecting a healthy copy of the OTOF gene into the inner ear of ten participants with genetic deafness. All participants experienced improved hearing, with a seven-year-old regaining almost full hearing.

Researchers noted the therapy was safe and well-tolerated, and are now working on treatments for other genetic causes of deafness.

Highly covered news with significance over 5.5

[6.3] US allows chip software sales to China again — cnbc.com (+19)

[6.1] Dutch intelligence: Russia uses chemical weapons in Ukraine — yle.fi (Swedish) (+18)

[6.0] NASA finds third interstellar comet entering our solar system — theconversation.com (+86)

[5.9] US judge blocks Trump's US-Mexico border asylum ban — dw.com (+53)

[5.7] US Congress passes Trump's controversial mega-bill — bbc.com (+353)

[5.5] Austria resumes deportations to Syria after fifteen years — theguardian.com (+11)

[5.6] Meta's chatbots message users first to boost engagement — techcrunch.com (+4)

[5.7] Scientists sequenced ancient Egyptian's genome — bbc.com (+14)

Thanks for reading!

— Vadim

You can track significant news in your country with premium.

-

🔗 vitali87/code-graph-rag v0.0.3-beta.1 release

What's Changed

- Feat/add scala java by @vitali87 in #10

- Feat/local models by @vitali87 in #12

- Fix: Use public execute_write method and correct pyproject.toml and redundancy by @Mirza-Samad-Ahmed-Baig in #14

- Fix: Use public execute_write method and correct pyproject.toml by @Mirza-Samad-Ahmed-Baig in #13

- feat: write and edit files to the codebase by @vitali87 in #16

- Fix/security confine to dir by @vitali87 in #17

- feat: better command line experience by @vitali87 in #18

- feat: add shell command execution by @vitali87 in #19

- fix: include tests for dev by @vitali87 in #20

- feat: tables files and multimodality by @vitali87 in #21

- feat: export KG to json and other stuff by @vitali87 in #22

- feat: add external image support for document analysis by @vitali87 in #23

- Feat/commands order by @vitali87 in #24

- feat: Add multiline input with Ctrl+J submit and Ctrl+C handler by @vitali87 in #25

- fix: rm unnec pdf file by @vitali87 in #26

- fix: simplify - remove show_repo command and related helper functions by @vitali87 in #27

- feat: add CALL relationship by @vitali87 in #28

- fix: add CALLS relationship to graph schema by @vitali87 in #30

- optimize: many things by @vitali87 in #29

New Contributors

- @Mirza-Samad-Ahmed-Baig made their first contribution in #14

Full Changelog: v0.0.2...v0.0.3

-

🔗 idursun/jjui v0.8.12 release

What's Changed

Improvements

Revset

- Completions show on the second line as a list now. You can use `tab`/`shift+tab` to cycle forward/backward.

- Loads and adds `revset-aliases` defined in your jj config to the list of completions.

- Keeps the history. You can use `up`/`down` to cycle through revset history. History is only available during the session (i.e. it's not persisted).

Squash

Got two new modifiers:

- You can use `e` for keeping the source revision empty (`--keep-emptied`).

- You can use `i` for running the squash operation in interactive mode (`--interactive`).

- Squash key configuration has changed:

      [keys.squash]
      mode = ["S"]
      keep_emptied = ["e"]
      interactive = ["i"]

Rebase

Revisions to be moved are marked with a `move` marker, and get updated according to the target (i.e. branch/source will mark all revisions to be moved according to the target).

Minor

- Details: Added absorb option to absorb changes in the selected files.

- Help window is updated to have 3-columns now.

- Changed auto refresh to proceed only when there's an actual change.

- JJ's colour palette is loaded from jj config at start up and applied to change_id, rest, diff renamed, diff modified, diff removed. This is the first step towards implementing colour themes for `jjui`.

Fixes

- Revisions view doesn't get stuck in `loading` state when the revset doesn't return any results.

- Selections should be kept as is across auto refreshes.

- Fixed various issues about bookmark management where delete bookmark menu items were not shown, and track/untrack items were shown incorrectly under certain circumstances #155 #156

- Double width characters should not cause visual glitches #138

- Fixed visual glitches when extending graph lines to accommodate graph overlays

Contributions

- gitignore: add `result` for `nix build .` by @ilyagr in #133

- CI and Nix: make `nix flake check` build the flake by @ilyagr in #132

- build(nix): add git version to --version by @teto in #150

- doc: Remove a duplicated maintainer mention by @Adda0 in #153

- build(nix): allow building flake when `self.dirtyRev` is not defined by @ilyagr in #152

- fixed display of empty revsets (#151) by @Gogopex in #154

- fix: refresh SelectedFile on ToggleSelect by @IvanVergiliev in #158

New Contributors

- @teto made their first contribution in #150

- @Gogopex made their first contribution in #154

- @IvanVergiliev made their first contribution in #158

Full Changelog: v0.8.11...v0.8.12

-

- July 03, 2025

-

🔗 r/reverseengineering Need an experienced eye on this beginner hacking project rss

Hope you don’t mind the message. I’ve been building a small Android app to help beginners get into ethical hacking—sort of a structured learning path with topics like Linux basics, Nmap, Burp Suite, WiFi hacking, malware analysis, etc.

I’m not here to promote it—I just really wanted to ask someone with experience in the space:

Does this kind of thing even sound useful to someone starting out?

Are there any learning features or topics you wish existed in one place when you were learning?

If you’re curious to check it out, here’s the Play Store link — no pressure at all: 👉 Just wanted to get honest thoughts from people who actually know what they're talking about. Appreciate your time either way!

submitted by /u/Hefty-Clue-1030

[link] [comments] -

🔗 r/reverseengineering Everyone's Wrong about Kernel AC rss

I've been having a ton of fun conversations with others on this topic. Would love to share and discuss this here.

I think this topic gets overly simplified when it's a very complex arms race that has an inherent and often misunderstood systems-level security dilemma.

submitted by /u/Outrageous-Shirt-963

[link] [comments] -

🔗 sacha chua :: living an awesome life June 2025: playdates, splash pads, sewing, Stardew rss

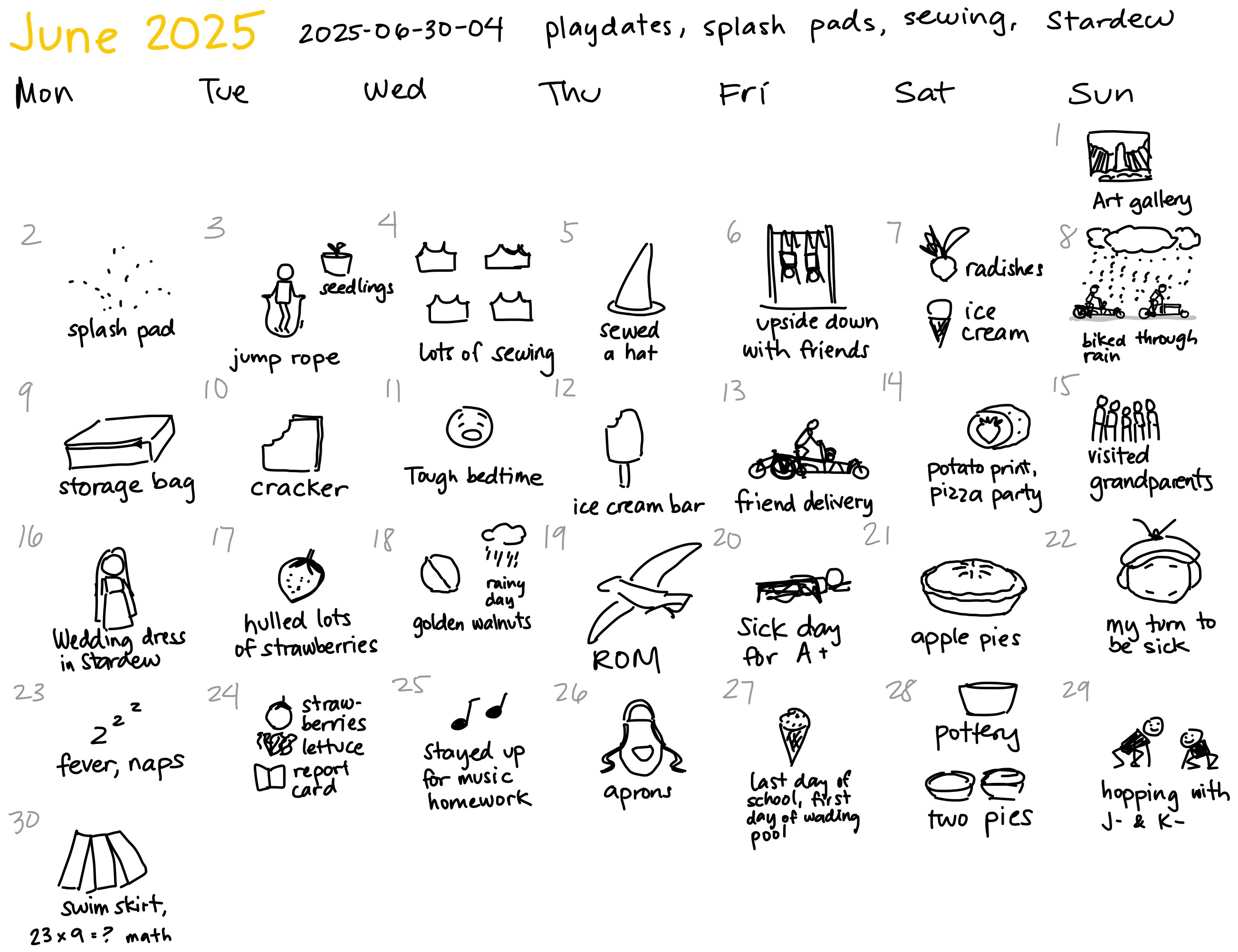

June 2025: 🖼️💦🪴🪡🪡🙃🍦🚲🪡🍪😴🍦🚲🥔👴👰🍓🌧️🦕🌡🥧😷🌡🍓🎵🪡🏊🏺🐸🪡

Text from sketch

June 2025 2025-06-30-04 playdates, splash pads, sewing, Stardew

- 🖼️ art gallery

- 💦 splash pad

- 🪴 jump rope, seedlings

- 🪡 lots of sewing

- 🪡 sewed a hat

- 🙃 upside down with friends

- 🍦 radishes, ice cream

- 🚲 biked through rain

- 🪡 storage bag

- 🍪 cracker

- 😴 tough bedtime

- 🍦 ice cream bar

- 🚲 friend delivery

- 🥔 potato print, pizza party

- 👴 visited grandparents

- 👰 wedding dress in Stardew

- 🍓 hulled lots of strawberries

- 🌧️ rainy day, golden walnuts

- 🦕 ROM

- 🌡 sick day for A+

- 🥧 apple pies

- 😷 my turn to be sick

- 🌡 fever, naps

- 🍓 strawberries, lettuce, report card

- 🎵 stayed up for music homework

- 🪡 aprons

- 🏊 last day of school, first day of wading pool

- 🏺 pottery, two pies

- 🐸 hopping with J- & K-

- 🪡 swim skirt, 23x9= math

A+ finished virtual grade 3 and is now on summer break. I let her decide between mostly structured and mostly unstructured time. She picked mostly unstructured time, with one set of private swimming lessons at an outdoor pool and one week-long afternoon summer camp focused on pottery wheels. The rest of the time is for hanging out at splash pads, wading pools, and swimming pools, often with A+'s friends. When it's too hot even for that, we stay inside. There haven't been as many "I'm booored!"s as I expected. I think dealing with school gave her a lot of practice in managing boredom and coming up with her own activities, which is fantastic. It's important to be able to check in with herself and figure out what she's curious about, what she wants to do, and to know that she can come up with that instead of needing someone else to direct her day. Sometimes A+ uses Claude to help her write stories, sometimes she builds LEGO, sometimes she plays Minecraft or Stardew Valley, and sometimes she watches Clone Wars. Sometimes we tag along on W-'s Bike Brigade deliveries, so that's nice. Sometimes she helps me with sewing by doing straight seams, winding the bobbin, or threading the needle. She's been cubing again, packing a 3x3 Rubik's cube when she thinks there might be some waiting time. I still have the timer app on my phone, so she can check how she's doing. This feels like a good kind of busy: not externally imposed, but intrinsically motivated. Not regimented, but going with the flow.

A+ has lots of ideas for things to sew based on things she wants to wear or use, and is very much into having us both wear matching outfits. It turns out that I fit into kid-sized Crocs, so it's easy to get matching colours there too. The pendulum of childhood, I guess. We're currently on the "let's match" side, and then we'll swing over to individuation, and then we'll swing back, and then further out to individuation, and so on. I love that we can explore this through our clothes, shoes, and interests. Some days she wants to be just like me, and almost physically tries to occupy the same space. (Cuddles are great! I know this opportunity is time-limited.) Other days she grumps at me and nothing I say is right. It's great to be able to not take it personally. It's all part of healthy development.

We're making quick progress through my fabric stash. I've been making clothes: mostly training bras, skorts, and swim skirts out of Spandex so that we can wear it straight into the pool and out again. I've even been able to make a few clothes for me instead of just for her. I used godets to turn last year's swim skirt for A+ into a swim skirt for me, and I added in-seam pockets. Pockets are great for stashing goggles, glasses, and diving toys. I want pockets in everything now. It's nice getting the hang of more of these little techniques, especially since it means I can turn more scraps and outgrown clothes into new things. I sewed a large zippered liner for W-'s drawer so that we could protect out-of-season wool clothes and blankets from moths. I used the leftover canvas to make a bag for A+ so that she could shop for snacks independently, since the reusable bags from the store dragged on the ground when she held them in her hand. This one is just the right size. It's great to be able to make things that fit. I also made aprons for her and one of her friends, whom we treated to a pottery class. A+ enjoyed potato-printing her bag and apron at the Bike Brigade x Not Far From the Tree pizza party at the park, which was a lot of fun. It might be interesting to pick up more paintable/dyeable fabric and some fabric paint so that we can make our own designs.

A+ was briefly sick with a fever, and then I had a sore throat and a fever too. Now no one has a fever, but I still have a persistent cough. We think we might have picked something up at the party. Even though it was outdoors it was a bit crowded, lots of people were talking, and we hadn't worn our masks. Oh well, just gotta do better next time and mask up at big events. At least I'm still testing negative for COVID. I'm masking at home so that W- doesn't get sick, and generally taking it easy. We got a membership for the ROM, but the annoyance of this cough is making me extra grumpy about crowds and indoor things, so it might be a while before we're back. Plenty to do outdoors now that the weather is warm and her friends play outside.

I probably should sleep more, but I've been staying up to play Stardew Valley, which you'd be able to tell from my time records. I like the game. Even the kiddo is learning to slow down and take care of her farm. Sometimes we play co-op, and sometimes we work on our own playthroughs. I have a fairly built-up standard farm playthrough where I let A+ take over Ginger Island. I'm proud of how I successfully didn't grump when she decided to rip up most of my starfruit plants there and then ended up not replacing them with anything. Not even a blip of grumpiness. I already had more money than I felt like spending, thanks to the ancient fruit winery I'd set up. Aside from cooking and puttering around with the sewing machine, A+ also liked giving my character relationship advice. She encouraged me to marry Emily, pronouncing her the most compatible. (She married Elliott in her own playthrough.) After I gave Emily the mermaid pendant and came back to the farmhouse, A+ had set up a mannequin with a full wedding outfit as a gift for me. She was proud of gathering all the materials needed and sewing the virtual outfit herself. I love that the sandbox nature of the game lets her come up with her own ideas and make things happen.

I also have a four corners farm with remixed bundles where I've just completed the community centre and I'm now slowly collecting hardwood for the boat to Ginger Island. I've developed an appreciation for the fishing minigame that I used to avoid. It's a great way to get treasure. Anyway, Stardew is a pleasant enough way to spend little bits of time here and there, and to relax after A+'s bedtime. It's encouraging to see that I could actually find plenty of discretionary time in my day for playing, and I can use that time for other things once this hyperfocus passes.

Our real-life garden is doing all right, too. Most of the remaining radishes have bolted, although some of the larger ones are still growing well. In spring I gave A+ a bunch of seed packets and let her plant entirely at her own discretion. I've been having fun figuring out how to identify and manage the results, thinning out the ones that are definitely not what we're looking for in that space or that just need a little more spacing. We get a lot of volunteer tomatoes, perilla, goosefoot, wood sorrel, and clover. I've been putting those in the compost to make room for the marigolds and poppies that I recognize from the seed packet pictures. I'm learning to identify other plants as they grow. It's fun letting A+ try whatever she likes and then figuring out how to work with that. It's also fun blending the real-life world and the virtual world. We make the sound effect from Stardew Valley when we uproot our radishes and hold them up above our heads.

Taking advantage of those last days of predictable focus time while virtual school was in session, I got the ball rolling for EmacsConf 2025 with the call for participation. I also enjoyed attending the virtual Emacs Berlin meetup and taking notes. I wrote a bunch of blog posts, too.

We'll see how my focused time settles down now that we're on summer schedule. It turns out that I still have plenty of free time. The daytime part is just more interruptible now because I want to be ready to do something with A+ or head out the door when A+ expresses an interest in going to a playdate or a pool. I still want to get my own stuff done instead of feeling like I'm on standby, so it's great that my notes make it easier for me to make progress in stop-and-go segments. I want her to feel like I'm happy to spend time with her instead of being distracted by an interrupted task. I also want her to see how I choose things to do with my time and how I use notes to help me work around the limitations of my brain and my attention. It's an interesting challenge balancing between occupation and flexibility. I want her to enjoy unstructured time and to be able to shift between solo interests and shared time according to the rhythms of her energy.

July is probably going to be about hanging out with A+ near some kind of water. I like this approach of trusting her to manage her time and attention, letting her take the initiative when it comes to going out and playing with friends or swimming in the pool. In the meantime, there's time for me to write and play.

Blog posts

- Emacs:

- Working on the plumbing in a small web community

- Using Org Mode, Emacs Lisp, and TRAMP to parse meetup calendar entries and generate a crontab

- Run source blocks in an Org Mode subtree by custom ID

- Transforming HTML clipboard contents with Emacs to smooth out Mailchimp annoyances: dates, images, comments, colours

- Thinking about time travel with the Emacs text editor, Org Mode, and backups

- Life:

- Emacs News:

Sketches

Time

| Category | Previous month % | This month % | Diff % | h/wk | Diff h/wk |
| --- | --- | --- | --- | --- | --- |
| Discretionary - Play | 3.7 | 14.6 | 10.9 | 23.7 | 18.2 |
| Discretionary - Productive | 12.5 | 14.8 | 2.3 | 24.0 | 3.8 |
| Personal | 10.7 | 11.5 | 0.8 | 18.7 | 1.4 |
| Discretionary - Family | 0.2 | 0.3 | 0.1 | 0.5 | 0.2 |
| Business | 2.0 | 0.9 | -1.1 | 1.4 | -1.9 |
| Unpaid work | 5.7 | 4.5 | -1.2 | 7.3 | -2.0 |
| Sleep | 31.3 | 27.3 | -4.0 | 44.3 | -6.7 |
| A+ | 33.9 | 26.3 | -7.7 | 42.7 | -12.9 |

You can comment on Mastodon or e-mail me at sacha@sachachua.com.

-

🔗 Rust Blog Stabilizing naked functions rss

Rust 1.88.0 stabilizes the `#[unsafe(naked)]` attribute and the `naked_asm!` macro which are used to define naked functions.

A naked function is marked with the `#[unsafe(naked)]` attribute, and its body consists of a single `naked_asm!` call. For example:

    /// SAFETY: Respects the 64-bit System-V ABI.
    #[unsafe(naked)]
    pub extern "sysv64" fn wrapping_add(a: u64, b: u64) -> u64 {
        // Equivalent to `a.wrapping_add(b)`.
        core::arch::naked_asm!(
            "lea rax, [rdi + rsi]",
            "ret"
        );
    }

What makes naked functions special, and gives them their name, is that the handwritten assembly block defines the entire function body. Unlike non-naked functions, the compiler does not add any special handling for arguments or return values.

This feature is a more ergonomic alternative to defining functions using `global_asm!`. Naked functions are used in low-level settings like Rust's `compiler-builtins`, operating systems, and embedded applications.

Why use naked functions?

But wait, if naked functions are just syntactic sugar for `global_asm!`, why add them in the first place?

To see the benefits, let's rewrite the `wrapping_add` example from the introduction using `global_asm!`:

    // SAFETY: `wrapping_add` is defined in this module,
    // and expects the 64-bit System-V ABI.
    unsafe extern "sysv64" {
        safe fn wrapping_add(a: u64, b: u64) -> u64;
    }

    core::arch::global_asm!(
        r#"
            // Platform-specific directives that set up a function.
            .section .text.wrapping_add,"ax",@progbits
            .p2align 2
            .globl wrapping_add
            .type wrapping_add,@function

        wrapping_add:
            lea rax, [rdi + rsi]
            ret

        .Ltmp0:
            .size wrapping_add, .Ltmp0-wrapping_add
        "#
    );

The assembly block starts and ends with the directives (`.section`, `.p2align`, etc.) that are required to define a function. These directives are mechanical, but they are different between object file formats. A naked function will automatically emit the right directives.

Next, the `wrapping_add` name is hardcoded, and will not participate in Rust's name mangling. That makes it harder to write cross-platform code, because different targets have different name mangling schemes (e.g. x86_64 macOS prefixes symbols with `_`, but Linux does not). The unmangled symbol is also globally visible, so that the `extern` block can find it, which can cause symbol resolution conflicts. A naked function's name does participate in name mangling and won't run into these issues.

A further limitation that this example does not show is that functions defined using global assembly cannot use generics. Const generics are especially useful in combination with assembly.

Finally, having just one definition provides a consistent place for (safety) documentation and attributes, with less risk of them getting out of date. Proper safety comments are essential for naked functions. The `naked` attribute is unsafe because the ABI (`sysv64` in our example), the signature, and the implementation have to be consistent.

How did we get here?

Naked functions have been in the works for a long time.

The original RFC for naked functions is from 2015. That RFC was superseded by RFC 2972 in 2020. Inline assembly in Rust had changed substantially at that point, and the new RFC limited the body of naked functions to a single `asm!` call with some additional constraints. And now, 10 years after the initial proposal, naked functions are stable.

Two additional notable changes helped prepare naked functions for stabilization:

Introduction of the `naked_asm!` macro

The body of a naked function must be a single `naked_asm!` call. This macro is a blend between `asm!` (it is in a function body) and `global_asm!` (only some operand types are accepted).

The initial implementation of RFC 2972 added lints onto a standard `asm!` call in a naked function. This approach made it hard to write clear error messages and documentation. With the dedicated `naked_asm!` macro the behavior is much easier to specify.

Lowering to `global_asm!`

The initial implementation relied on LLVM to lower functions with the `naked` attribute for code generation. This approach had two issues:

- LLVM would sometimes add unexpected additional instructions to what the user wrote.

- Rust has non-LLVM code generation backends now, and they would have had to implement LLVM's (unspecified!) behavior.

The implementation that is stabilized now instead converts the naked function into a piece of global assembly. The code generation backends can already emit global assembly, and this strategy guarantees that the whole body of the function is just the instructions that the user wrote.

What's next for assembly?

We're working on further assembly ergonomics improvements. If naked functions are something you are excited about and (may) use, we'd appreciate you testing these new features and providing feedback on their designs.

`extern "custom"` functions

Naked functions usually get the `extern "C"` calling convention. But often that calling convention is a lie. In many cases, naked functions don't implement an ABI that Rust knows about. Instead they use some custom calling convention that is specific to that function.

The `abi_custom` feature adds `extern "custom"` functions and blocks, which allows us to correctly write code like this example from compiler-builtins:

    #![feature(abi_custom)]

    /// Division and modulo of two numbers using Arm's nonstandard ABI.
    ///
    /// ```c
    /// typedef struct { int quot; int rem; } idiv_return;
    /// __value_in_regs idiv_return __aeabi_idivmod(int num, int denom);
    /// ```
    // SAFETY: The assembly implements the expected ABI, and "custom"
    // ensures this function cannot be called directly.
    #[unsafe(naked)]
    pub unsafe extern "custom" fn __aeabi_idivmod() {
        core::arch::naked_asm!(
            "push {{r0, r1, r4, lr}}", // Back up clobbers.
            "bl {trampoline}", // Call an `extern "C"` function for a / b.
            "pop {{r1, r2}}",
            "muls r2, r2, r0", // Perform the modulo.
            "subs r1, r1, r2",
            "pop {{r4, pc}}", // Restore clobbers, implicit return by setting `pc`.
            trampoline = sym crate::arm::__aeabi_idiv,
        );
    }

A consequence of using a custom calling convention is that such functions cannot be called using a Rust call expression; the compiler simply does not know how to generate correct code for such a call. Instead the compiler will error when the program does try to call an `extern "custom"` function, and the only way to execute the function is using inline assembly.

`cfg` on lines of inline assembly

The `cfg_asm` feature adds the ability to annotate individual lines of an assembly block with `#[cfg(...)]` or `#[cfg_attr(..., ...)]`. Configuring specific sections of assembly is useful to make assembly depend on, for instance, the target, target features, or feature flags. For example:

    #![feature(cfg_asm)]

    global_asm!(
        // ...

        // If enabled, initialise the SP. This is normally
        // initialised by the CPU itself or by a bootloader, but
        // some debuggers fail to set it when resetting the
        // target, leading to stack corruptions.
        #[cfg(feature = "set-sp")]
        "ldr r0, =_stack_start
         msr msp, r0",

        // ...
    )

This example is from the cortex-m crate that currently has to use a custom macro that duplicates the whole assembly block for every use of `#[cfg(...)]`. With `cfg_asm`, that will no longer be necessary.

-

🔗 Console.dev newsletter tigrisfs rss

Description: Object storage as a filesystem.

What we like: Mounts Tigris object storage buckets as a local filesystem. Includes optimizations for small files. POSIX-compatible so you get permissions, symlinks, etc. Smart caching and prefetching of directories and metadata to improve performance.

What we dislike: Some operations will be slow if uncached - remember that object storage is not block storage.

-

🔗 Console.dev newsletter Kingfisher rss

Description: Secret scanning & validation.

What we like: Uses hardware accelerated regex parsing combined with live validation to find secrets. Optimized with language aware parsing to check regex matches against real patterns. Can scan locally and remotely across Git histories. Includes many pattern detections out of the box. Supports writing custom rules and validators.

What we dislike: No Linux packages (available as a small, ~14 MB compiled Rust binary). Hardware acceleration is based on Intel features.

-

- July 02, 2025

-

🔗 amantus-ai/vibetunnel VibeTunnel 1.0.0-beta.6 release

Changelog

[1.0.0-beta.6] - 2025-07-03

✨ New Features

Git Repository Monitoring 🆕

- Real-time Git Status - Session rows now display git information including branch name and change counts

- Visual Indicators - Color-coded status: orange for branches, yellow for uncommitted changes

- Quick Navigation - Click folder icons to open repositories in Finder

- GitHub Integration - Context menu option to open repositories directly on GitHub

- Smart Caching - 5-second cache prevents excessive git commands while keeping info fresh

- Repository Detection - Automatically finds git repositories in parent directories
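The caching behavior described above can be sketched as a small time-to-live cache. This is only an illustration of the general technique in Rust, not VibeTunnel's actual implementation. The `TtlCache` type, its method names, and the use of a branch name as the cached value are all hypothetical.

```rust
use std::collections::HashMap;
use std::time::{Duration, Instant};

/// Hypothetical time-to-live cache keyed by repository path.
struct TtlCache<V> {
    ttl: Duration,
    entries: HashMap<String, (Instant, V)>,
}

impl<V: Clone> TtlCache<V> {
    fn new(ttl: Duration) -> Self {
        Self { ttl, entries: HashMap::new() }
    }

    /// Returns the cached value if it is younger than `ttl`. Otherwise calls
    /// `fetch` (for example, code that shells out to git), stores the result
    /// with the timestamp `now`, and returns it.
    fn get_or_fetch(&mut self, key: &str, now: Instant, fetch: impl FnOnce() -> V) -> V {
        if let Some((stored_at, value)) = self.entries.get(key) {
            if now.duration_since(*stored_at) < self.ttl {
                return value.clone();
            }
        }
        let value = fetch();
        self.entries.insert(key.to_string(), (now, value.clone()));
        value
    }
}

fn main() {
    let mut cache = TtlCache::new(Duration::from_secs(5));
    let mut calls = 0;
    let t0 = Instant::now();

    // First lookup misses and runs the fetch closure.
    let a = cache.get_or_fetch("/repo", t0, || { calls += 1; "main".to_string() });
    // A lookup 2 seconds later is served from the cache.
    let b = cache.get_or_fetch("/repo", t0 + Duration::from_secs(2), || { calls += 1; "dev".to_string() });
    // After 6 seconds the entry has expired, so the fetch runs again.
    let c = cache.get_or_fetch("/repo", t0 + Duration::from_secs(6), || { calls += 1; "dev".to_string() });

    assert_eq!((a.as_str(), b.as_str(), c.as_str()), ("main", "main", "dev"));
    assert_eq!(calls, 2);
}
```

Passing `now` in as a parameter, rather than reading the clock inside the cache, keeps the expiry logic easy to test without sleeping.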

Enhanced Command-Line Tool

- Terminal Title Management - `vt title` can set the title of your Terminal. Even Claude can use it!

- Version Information - `vt help` now displays binary path, version, build date, and platform information for easier troubleshooting

- Apple Silicon Support - Automatic detection of Homebrew installations on ARM Macs (/opt/homebrew path)

Menu Bar Enhancements

- Rich Session Interface - Powerful new menu bar with visual activity indicators and real-time status tracking

- Native Session Overview - See all open terminal sessions and even Claude Code status right from the menu bar.

- Sleep Prevention - Mac stays awake when running terminal sessions

Web Interface Improvements

- Modern Visual Design - Complete UI overhaul with improved color scheme, animations, and visual hierarchy

- Collapsible Sidebar - New toggle button to maximize terminal viewing space (preference is remembered)

- Better Session Loading - Fixed race conditions that caused sessions to appear as "missing"

- Responsive Enhancements - Improved adaptation to different screen sizes with better touch targets

🚀 Performance & Stability

Terminal Output Reliability

- Fixed Output Corruption - Resolved race conditions causing out-of-order or corrupted terminal output

- Stable Title Updates - Terminal titles now update smoothly without flickering or getting stuck

Server Improvements

- Logger Fix - Fixed double initialization that was deleting log files

- Better Resource Cleanup - Improved PTY manager cleanup and timer management

- Enhanced Error Handling - More robust error handling throughout the server stack

Simplified Tailscale Setup

- Switched to Tailscale's local API for easier configuration

- Removed manual token management requirements

- Streamlined connection UI for minimal setup

[1.0.0-beta.5] - 2025-01-29

🎨 UI Improvements

- Version Display - Web interface now shows full version including beta suffix (e.g., v1.0.0-beta.5)

- Build Filtering - Cleaner build output by filtering non-actionable Xcode warnings

- Mobile Scrolling - Fixed scrolling issues on mobile web browsers

🔧 Infrastructure

- Single Source of Truth - Web version now automatically reads from package.json at build time

- Version Sync Validation - Build process validates version consistency between macOS and web

- CI Optimization - Tests only run when relevant files change (iOS/Mac/Web)

- E2E Test Suite - Comprehensive Playwright tests for web frontend reliability

🐛 Bug Fixes

- No-Auth Mode - Fixed authentication-related error messages when running with `--no-auth`

- Log Streaming - Fixed frontend log streaming in no-auth mode

- Test Reliability - Resolved flaky tests and improved test infrastructure

📝 Developer Experience

- Release Documentation - Enhanced release process documentation with version sync requirements

- Test Improvements - Better test fixtures, helpers, and debugging capabilities

- Error Suppression - Cleaner logs when running in development mode

[1.0.0-beta.4] - 2025-06-25

- We replaced HTTP Basic auth with System Login or SSH Keys for better security.

- Sessions now show exited terminals by default - no more hunting for terminated sessions

- Reorganized sidebar with cleaner, more compact header and better button placement

- Added user menu in sidebar for quick access to settings and logout

- Enhanced responsive design with better adaptation to different screen sizes

- Improved touch targets and spacing for mobile users

- Leverages View Transitions API for smoother animations with CSS fallbacks

- More intuitive default settings for better out-of-box experience

[1.0.0-beta.3] - 2025-06-23

There's too much to list! This is the version you've been waiting for.

- Redesigned, responsive, animated frontend.

- Improved terminal width spanning and layout optimization

- File-Picker to see files on-the-go.

- Creating new Terminals is now much more reliable.

- Added terminal font size adjustment in the settings dropdown

- Fresh new icon for Progressive Web App installations

- Refined bounce animations for a more subtle, professional feel

- Added retro CRT-style phosphor decay visual effect for closed terminals

- Fixed buffer aggregator message handling for smoother terminal updates

- Better support for shell aliases and improved debug logging

- Enhanced Unix socket server implementation for faster local communication

- Special handling for Warp terminal with custom enter key behavior

- New dock menu with quick actions when right-clicking the app icon

- More resilient vt command-line tool with better error handling

- Ensured vibetunnel server properly terminates when Mac app is killed

[1.0.0-beta.2] - 2025-06-19

🎨 Improvements

- Redesigned slick new web frontend

- Faster terminal rendering in the web frontend

- New Sessions spawn new Terminal windows. (This needs Applescript and Accessibility permissions)

- Enhanced font handling with system font priority

- Better async operations in PTY service for improved performance

- Improved window activation when showing the welcome and settings windows

- Preparations for Linux support

🐛 Bug Fixes

- Fixed window front order when dock icon is hidden

- Fixed PTY service enhancements with proper async operations

- Fixed race condition in session creation that caused frontend to open previous session

[1.0.0-beta.1] - 2025-06-17

🎉 First Public Beta Release

This is the first public beta release of VibeTunnel, ready for testing by early adopters.

✨ What's Included

- Complete terminal session proxying to web browsers

- Support for multiple concurrent sessions

- Real-time terminal rendering with full TTY support

- Secure password-protected dashboard

- Tailscale and ngrok integration for remote access

- Automatic updates via Sparkle framework

- Native macOS menu bar application

🐛 Bug Fixes Since Internal Testing

- Fixed visible circle spacer in menu (now uses Color.clear)

- Removed development files from app bundle

- Enhanced build process with automatic cleanup

- Fixed Sparkle API compatibility for v2.7.0

📝 Notes

- This is a beta release - please report any issues on GitHub

- Auto-update functionality is fully enabled

- All core features are stable and ready for daily use

✨ What's New Since Internal Testing

- Improved stability and performance

- Enhanced error handling for edge cases

- Refined UI/UX based on internal feedback

- Better session cleanup and resource management

- Optimized for macOS Sonoma and Sequoia

🐛 Known Issues

- Occasional connection drops with certain terminal applications

- Performance optimization needed for very long sessions

- Some terminal escape sequences may not render perfectly


[1.0.0] - 2025-06-16

🎉 Initial Release

VibeTunnel is a native macOS application that proxies terminal sessions to web browsers, allowing you to monitor and control terminals from any device.

✨ Core Features

Terminal Management

- Terminal Session Proxying - Run any command with `vt` prefix to make it accessible via web browser

- Multiple Concurrent Sessions - Support for multiple terminal sessions running simultaneously

- Session Recording - All sessions automatically recorded in asciinema format for later playback

- Full TTY Support - Proper handling of terminal control sequences, colors, and special characters

- Interactive Commands - Support for interactive applications like vim, htop, and more

- Shell Integration - Direct shell access with `vt --shell` or `vt -i`

Web Interface

- Browser-Based Dashboard - Access all terminal sessions at http://localhost:4020

- Real-time Terminal Rendering - Live terminal output using asciinema player

- WebSocket Streaming - Low-latency real-time updates for terminal I/O

- Mobile Responsive - Fully functional on phones, tablets, and desktop browsers

- Session Management UI - Create, view, kill, and manage sessions from the web interface

Security & Access Control

- Password Protection - Optional password authentication for dashboard access

- Keychain Integration - Secure password storage using macOS Keychain

- Access Modes - Choose between localhost-only, network, or secure tunneling

- Basic Authentication - HTTP Basic Auth support for network access

Remote Access Options

- Tailscale Integration - Access VibeTunnel through your Tailscale network

- ngrok Support - Built-in ngrok tunneling for public access with authentication

- Network Mode - Local network access with IP-based connections

macOS Integration

- Menu Bar Application - Lives in the system menu bar with optional dock mode

- Launch at Login - Automatic startup with macOS

- Auto Updates - Sparkle framework integration for seamless updates

- Native Swift/SwiftUI - Built with modern macOS technologies

- Universal Binary - Native support for both Intel and Apple Silicon Macs

CLI Tool (`vt`)

- Command Wrapper - Prefix any command with `vt` to tunnel it

- Claude Integration - Special support for AI assistants with `vt --claude` and `vt --claude-yolo`

- Direct Execution - Bypass shell with `vt -S` for direct command execution

- Automatic Installation - CLI tool automatically installed to /usr/local/bin

Server Implementation

- Dual Server Architecture - Choose between Rust (default) or Swift server backends

- High Performance - Rust server for efficient TTY forwarding and process management

- RESTful APIs - Clean API design for session management

- Health Monitoring - Built-in health check endpoints

Developer Features

- Server Console - Debug view showing server logs and diagnostics

- Configurable Ports - Change server port from default 4020

- Session Cleanup - Automatic cleanup of stale sessions on startup

- Comprehensive Logging - Detailed logs for debugging

🛠️ Technical Details

- Minimum macOS Version : 14.0 (Sonoma)

- Architecture : Universal Binary (Intel + Apple Silicon)

- Languages : Swift 6.0, Rust, TypeScript

- UI Framework : SwiftUI

- Web Technologies : TypeScript, Tailwind CSS, WebSockets

- Build System : Xcode, Swift Package Manager, Cargo, npm

📦 Installation

- Download DMG from GitHub releases

- Drag VibeTunnel to Applications folder

- Launch from Applications or Spotlight

- CLI tool (`vt`) automatically installed on first launch

🚀 Quick Start

    # Monitor AI agents
    vt claude

    # Run development servers
    vt npm run dev

    # Watch long-running processes
    vt python train_model.py

    # Open interactive shell
    vt --shell

👥 Contributors

Created by:

- @badlogic - Mario Zechner

- @mitsuhiko - Armin Ronacher

- @steipete - Peter Steinberger

📄 License

VibeTunnel is open source software licensed under the MIT License.

Version History

Pre-release Development

The project went through extensive development before the 1.0.0 release, including:

- Initial TTY forwarding implementation using Rust

- macOS app foundation with SwiftUI

- Integration of asciinema format for session recording

- Web frontend development with real-time terminal rendering

- Hummingbird HTTP server implementation

- ngrok integration for secure tunneling

- Sparkle framework integration for auto-updates

- Comprehensive testing and bug fixes

- UI/UX refinements and mobile optimizations

-

🔗 r/reverseengineering Anubi: Open-Source Malware Sandbox Automation Framework with CTI Integration rss

Hello everyone!

Over the past months, I've been working on Anubi, an open-source automation engine that extends the power of Cuckoo sandbox with Threat Intelligence capabilities and custom analysis logic.

Its key features are:

- Automates static/dynamic analysis of suspicious files (EXE, DLL, PDF…)

- Enriches Cuckoo results with external threat intelligence feeds

- Integrates custom logic for IOC extraction, YARA scanning, score aggregation

- JSON outputs, webhook support, modular design

Anubi is designed for analysts, threat hunters and SOCs looking to streamline malware analysis pipelines. It’s written in Python and works as a standalone backend engine (or can be chained with other tools like MISP or Cortex).

It is fully open source: https://github.com/kavat/anubi

Would love feedback, suggestions or contributors.

Feel free to star ⭐ the project if you find it useful!

submitted by /u/kavat87

[link] [comments] -

🔗 jj-vcs/jj v0.31.0 release

About

jj is a Git-compatible version control system that is both simple and powerful. See the installation instructions to get started.

Breaking changes

- Revset expressions like `hidden_id | description(x)` now search the specified hidden revision and its ancestors as well as all visible revisions.

- Commit templates no longer normalize `description` by appending final newline character. Use `description.trim_end() ++ "\n"` if needed.

Deprecations

- The `git.push-bookmark-prefix` setting is deprecated in favor of `templates.git_push_bookmark`, which supports templating. The old setting can be expressed in template as `"<prefix>" ++ change_id.short()`.

New features

- New `change_id(prefix)`/`commit_id(prefix)` revset functions to explicitly query commits by change/commit ID prefix.

- The `parents()` and `children()` revset functions now accept an optional `depth` argument. For instance, `parents(x, 3)` is equivalent to `x---`, and `children(x, 3)` is equivalent to `x+++`.

- `jj evolog` can now follow changes from multiple revisions such as divergent revisions.

- `jj diff` now accepts `-T`/`--template` option to customize summary output.

- Log node templates are now specified in toml rather than hardcoded.

- Templates now support `json(x)` function to serialize values in JSON format.

- The ANSI 256-color palette can be used when configuring colors. For example, `colors."diff removed token" = { bg = "ansi-color-52", underline = false }` will apply a dark red background on removed words in diffs.
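The `depth` argument to `parents()` can be pictured as applying the `-` operator that many times. The sketch below models this on a toy commit graph. It is not jj's implementation, and the `Graph` type, the function names, and the commit names are hypothetical.

```rust
use std::collections::{BTreeSet, HashMap};

/// Toy commit graph: each commit name maps to its parent names.
type Graph = HashMap<&'static str, Vec<&'static str>>;

/// One application of the `-` operator: the parents of every commit in `set`.
fn parents_once(graph: &Graph, set: &BTreeSet<&'static str>) -> BTreeSet<&'static str> {
    set.iter()
        .flat_map(|c| graph.get(c).into_iter().flatten().copied())
        .collect()
}

/// Models `parents(x, depth)` as `depth` repeated applications of `-`.
fn parents(graph: &Graph, start: &'static str, depth: usize) -> BTreeSet<&'static str> {
    let mut current = BTreeSet::from([start]);
    for _ in 0..depth {
        current = parents_once(graph, &current);
    }
    current
}

fn main() {
    // History with one merge: `d` has parents `b` and `c`, both children of `a`.
    let graph: Graph = HashMap::from([
        ("a", vec![]),
        ("b", vec!["a"]),
        ("c", vec!["a"]),
        ("d", vec!["b", "c"]),
        ("e", vec!["d"]),
    ]);

    assert_eq!(parents(&graph, "e", 1), BTreeSet::from(["d"])); // like `e-`
    assert_eq!(parents(&graph, "e", 2), BTreeSet::from(["b", "c"])); // like `e--`
    assert_eq!(parents(&graph, "e", 3), BTreeSet::from(["a"])); // like `e---`
}
```

In this model `children(x, depth)` would be the same loop run over the reversed edges.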

Fixed bugs

- `jj file annotate` can now process files at a hidden revision.

- `jj op log --op-diff` no longer fails at displaying "reconcile divergent operations." #4465

- `jj util gc --expire=now` now passes the corresponding flag to `git gc`.

- `change_id`/`commit_id.shortest()` template functions now take conflicting bookmark and tag names into account. #2416

- Fixed lockfile issue on stale file handles observed with NFS.

Packaging changes

- `aarch64-windows` builds (release binaries and `main` snapshots) are now provided.

Contributors

Thanks to the people who made this release happen!

- Anton Älgmyr (@algmyr)

- Austin Seipp (@thoughtpolice)

- Benjamin Brittain (@benbrittain)

- Cyril Plisko (@imp)

- Daniel Luz (@mernen)

- Gaëtan Lehmann (@glehmann)

- Gilad Woloch (@giladwo)

- Greg Morenz (@gmorenz)

- Igor Velkov (@iav)

- Ilya Grigoriev (@ilyagr)

- Jade Lovelace (@lf-)

- Jonas Greitemann (@jgreitemann)

- Josh Steadmon (@steadmon)

- juemrami (@juemrami)

- Kaiyi Li (@06393993)

- Lars Francke (@lfrancke)

- Martin von Zweigbergk (@martinvonz)

- Osama Qarem (@osamaqarem)

- Philip Metzger (@PhilipMetzger)

- raylu (@raylu)

- Scott Taylor (@scott2000)

- Vincent Ging Ho Yim (@cenviity)

- Yuya Nishihara (@yuja)

-

-

🔗 Luke Muehlhauser Media diet for Q2 2025 rss

Music

Music I most enjoyed discovering this quarter:1

- DJ Koze: Music Can Hear Us (2025)

- Wayne Horvitz: Those Who Remain mvt 1 (2018), "A Walk in the Rain" & "Barber Shop" (2014)

- George Russell: "Time Spiral" and "Ezz-thetic" (1983)

- Aaron Parks: Invisible Cinema (2008)

- Spiro Dussias: "Negative" (2024)

- Vijay Iyer: Far from Over (2017)

- Christian McBride: Live at Tonic (2006)

- McCoy Tyner: The Turning Point (1992)

- Chris Potter: Follow the Red Line (2007)

- Eskaton: Musique post-atomique (1979)

- Bob Brookmeyer: "Make Me Smile" (1982)

- Levi McClain: Etch a Sketch (2018)

- Graeme Shields & others: Pocket Grooves (2019)

- Adrián Berenguer: "Overture" (2025)

- Matyáš Novák: "Vltava" (2021)

- Carla Bley: Fleur Carnivore (1989)

- Too Many Zooz: "Lose Control" (2024), "Severance Theme" (2025)

- Aaron Hibell & Dan Heath: "Odyssey" (2025)

- Natural Information Society & Bitchin' Bajas: "Clock no Clock" (2025)

- Josef Gung'l: "Narren Galopp" (c. 1855)

- Eduard Strauss: "Bahn Frei!" (1869)

- Trombone Shorty: "Hurricane Season" & "Neph" (2010)

- Andrew Wrangell: "Rush E" (2018)

- Darcy James Argue: "Best Friends Forever" (2016)

- Woody Shaw: The Moontrane (1975)

- Gustavo Santaolalla: "Babel"

- Saagara: 3 (2024)

- New Age Doom / Tuvaband: There Is No End (2023)

- KALI Trio: "Transitoriness" (2021)

- Avishai Cohen: Continuo (2006)

- Jacques Loussier: Plays Bach, the 50th Anniversary Recording (2009)

- Bent Knee: Twenty Pills Without Water (2024)

- Soap&Skin: Untitled (2008), "Sugarbread" (2013)

- Danny Elfman: Back to School (1986), Darkman (1990), Serenada Schizophrana (2006), Rabbit & Rogue (2008), Iris (2011), Eleven Eleven (2017), Percussion Quartet (2019), Percussion Concerto (2022), Wunderkammer (2022), Cello Concerto (2024)

- Christopher Tin: Calling All Dawns (2009), The Drop That Contained the Sea (2014), To Shiver the Sky (2020)

- Kosaku Yamada [playlist]: Symphony in F Major mvt 4 (1912), "Sunayama" (1923)

- L. Subramaniam [playlist]: "Rainbow Serenade" & "Kali Dance" (1981), "Garland" (1982), Double Concerto for Violin and Flute in E Major (1983), "Flight of the Humble Bee" (1984), Fantasy on Vedic Chants (1985), Spring Rhapsody (1986), Turbulence Concerto mvts 1 & 3 (1987), Shanti Priya mvts 1 & 3 (1988), Freedom Symphony (2007), "Tribute to Bach" (2015), Isabella Violin Concerto (2016), Bharat Symphony (2017), Mahatma Symphony (2022)

- Ludovico Einaudi [playlist]: "The Apple Tree" (1995), Doctor Zhivago (2002), "Nuvole Bianche" & "Dietro Casa" (2004), "Primavera" & "Divenire" (2006), "Lady Labyrinth" & "Nightbook" (2009), Intouchables (2011), "Wetlands" (2012), "Experience" & "Life" & "Run" (2013), "Night" (2015), Nomadland (2021)

- MONACA Inc. [playlist]: several tracks from NieR Gestalt & RepliCant (2010)

- Miles Davis: 'Four' & More (1966)

- Terence Blanchard [playlist]: "Hannibal" & "Kaos" & "Unchanged" & "Soldiers" (2018)

- Kurt Rosenwinkel [playlist]: "Chords" & "Flute" (2008)

- Sonny Rollins: G-Man (1987)

- Heiner Goebbels: "Ballade vom zerrissenen Rock" (1998)

- Annie Gosfield: "Impulse Turbine: Inlet, Exhaust-combustion Chamber" (1999)

- Daniel Bernard Roumain: Voodoo Violin Concerto (2002)

- Matthew Hindson: Speed (1997)

- Mike Mills: Concerto for Violin, Rock Band, and String Orchestra (2016)

- Cannonball Adderley: Mercy, Mercy, Mercy (1967)

- Dexter Gordon: Homecoming (1977)

- VSOP the Quintet: Live Under the Sky (1979)

- Lubomyr Melnyk: Corollaries (2013)

Rediscovered or revisited, and really liked:2

- Arcangelo Corelli: "La folia" (1700)

- Wayne Horvitz: "Prodigal Sun Revisited" (1990)

- Claudio Monteverdi: "Zefiro torna e di soavi accenti" (1632)

- George Frederic Handel: Suite in D Minor [HWV 437] (1706)

- Saint-Saens: "Havanaise" (1887), Piano Concerto No. 5 mvt 3 (1896)

- Beethoven: Piano Sonata No. 23 "Appassionata" mvts. 1 & 3 (1806), Piano Concerto No. 5 "Emperor" (1809)

- Mozart: Piano Concerto No. 20 mvt. 3 (1785), Piano Concerto No. 21 mvt. 2 (1785), Piano Sonata No. 16 mvt. 1 (1788)

- Karl Jenkins: Adiemus: Songs of Sanctuary (1995), Adiemus II: Cantata Mundi (1996), Adiemus III: Dances of Time (1998), Adiemus IV: The Eternal Knot (2001), Adiemus V: Vocalise (2004), "Canción Amarilla" (2013), Symphonic Adiemus (2017)

- Sons of Kemet: Burn (2013), Lest We Forget What We Came Here to Do (2015)

- Metallica: S&M (1999)

- Frank Zappa: King Kong (1969), "Strictly Genteel" (1971)

- Soap&Skin: Lovetune for Vacuum (2009), Narrow (2012)

- Robert Rodriguez: "Sin City" & "Sin City End Titles" (2005)

- Philip Glass: Powaqqatsi (1988), "It Was Always You, Helen" (1992), "Escape to India" (2003), "Intensive Time" (2002), "100,000 People" & "Target Destruction" (2003), "Miller's Theme" (2003)

- Kamasi Washington [playlist]: "The Conception" & "The Bombshell's Waltz" & "Bobby Boom Bap" (2007), "Change of the Guard" & "Askim" & "Miss Understanding" & "Leroy and Lanisha" & "The Magnificent 7" & "Malcolm's Theme" & "The Message" (2015), Harmony of Difference (2017), "The Secret of Jinison" & "Fists of Fury" & "Hub-Tones" & "One of One" & "Vi Lua Vi Sol" & "Show Us the Way" & "Will You Sing" (2018), "Lesanu" & "The Garden Path" & "Prologue" (2024), Lazarus (2025)

- Wim Mertens [playlist]: "Birds for the Mind" & "Struggle for Pleasure" & "4 Mains" (1987), "No Testament" & "The Whole" (1989), "Iris" & "Far" (1991), "Their Duet" & "His Own Thing" & "Watch Over Me" & "Shot One" (1993), Jardin Clos (1996), Skopos (2003), Partes Extra Partes (2006), Receptacle (2007), Zee Versus Zed (2010)

- Ill Considered: Ill Considered (2017), "Delusion" (2018), "Incandescent Rage" & "Single Leaf" (2019), Liminal Space (2021), Precipice (2024)

- Rollerball: Ahura (2008), Murwa Mbwa (2011)

- Fire! [playlist]: "Can I Hold You for a Minute?" & "You Liked Me Five Minutes Ago" (2009), "And the Stories Will Flood Your Satisfaction" & "He Wants to Sleep in a Dream" (2012), "Exit! Pt. 1" (2013), "Enter Part Four" (2014), "Ritual, Pts 1-2" (2016), "The Hands" & "Washing Your Heart in Filth" (2018), "Weekends" & "Blue Crystal Fire" (2019), "A lost farewell" (2023)

- Anna von Hausswolff [playlist]: "Lost at Sea" (2010), Ceremony (2014), "Pomperipossa" (2015), "The Mysterious Vanishing of Electra" & "Ugly and Vengeful" (2018)

- Howard Shore [playlist]: "A. Hauser and O'Brien B. Bugpowder" (1991), "The Shire" & "Bag End" & "The Council of Elrond Assembles" & "The Road Goes Ever On…" (2001), "The Three Hunters" (2002), "The Battle of Pelennor Fields" & "The Lighting of the Beacons" & "For Frodo" (2003), "Believe" (2010)

I also listened to a significant portion of the works by each of the composers listed below.3 My favorite pieces from them were (names linked to playlists):

- Franz Liszt (b. 1811): Schubert Marches Nos. 3 & 4 (1846), Totentanz (1849), "Un sospiro" (1849), Liebesträume No. 3 (1850), Paganini Studies Nos. 3 & 4 (1851), Hungarian Rhapsody No. 2 (1851), Transcendental Études No. 4 (1852), Piano Concerto No. 2 mvt 2 (1861)

- Hector Berlioz (b. 1803): "Marche hongroise" (1845)

- Gustav Holst (b. 1874): The Planets (1917)

- Modest Mussorgsky (b. 1839): Night on Bald Mountain (1867), "Varlaam's Drinking Song" (1873), Pictures at an Exhibition (1874)

- Giuseppe Verdi (b. 1813): "La donna è mobile" (1851), "Libiamo ne' lieti calici" (1853), Messa da Requiem mvts. 3 & 19 (1874)

- Bedrich Smetana (b. 1824): Triumphal Symphony (1853), The Bartered Bride (1866), Ma vlast (1879)

- Gioachino Rossini (b. 1792): The Italian Girl in Algiers "Overture" (1813), The Barber of Seville "Overture" and "Largo al factotum" (1816), William Tell "Overture (Finale)" (1829)

- Cesar Franck (b. 1822): [none]

- Carl Maria von Weber (b. 1786): [none]

- Jean-Philippe Rameau (b. 1683): "Les cyclopes" (1724), "Les Sauvages" (1725), Platée act 1 scene 6's air & orage (1745), Zais overture (1748)

- Charles-Valentin Alkan (b. 1813): Etude [from Encyclopédie Du Pianiste Compositeur] (1840), Piano Trio No. 1 mvt 4 (1841), Symphony for Solo Piano mvt 1 (1857)

- Giuseppe Torelli (b. 1658): [none]

- Georg Philipp Telemann (b. 1681): [none]

- Edouard Lalo (b. 1823): Fantasie Originale mvt 1 (1848), Divertissement mvt 4 (1872), Norwegian Fantasy for Violin and Orchestra mvts 1 & 3 (1878), Norwegian Fantasy (1878), Romance-Sérénade (1879), Fantasy-Ballet for Violin and Orchestra (1885), Symphony in G Minor mvts 1 & 4 (1886), Piano Concerto in F Minor (1889)

- Louis Spohr (b. 1784): String Quartet No. 3 mvt 1 (1806)

- Isaac Albeniz (b. 1860): Iberia mvts 1 & 2 (arr. for orch.) (1908), Suite española (arr. for orch.) (1889), "Asturias (Leyenda)" (arr. for guitar) (1889), "Tango" (arr. for orch.) (1890)

- Charles Gounod (b. 1818): "Funeral March of a Marionette" (1872)

- Henryk Wieniawski (b. 1835): Adagio elegiaque (1852), Capriccio-Valse (1852), Violin Concerto No. 1 mvt 3 (1853), Variations on an Original Theme (1854), 8 Etudes-Caprices No. 4 (1862), "Scherzo Tarantelle" (1885)

- Max Bruch (b. 1838): Violin Concerto No. 1 mvt 3 (1867), Symphony No. 1 mvt 3 (1868), Scottish Fantasy for Violin and Orchestra (1880), Violin Concerto No. 3 mvt 3 (1891), 8 Pieces Nos. 2 & 7 (1910)

- Henri Duparc (b. 1848): [none]

- Ethel Smyth (b. 1858): [none]

- C.P.E. Bach (b. 1714): Symphony in E Minor [Wq 178] mvt 1 (1756), Symphony in E-Flat Major [Wq 179] mvt 1 (c. 1757), "Solfeggio" [H 220] (c. 1766)

- Anton Bruckner (b. 1824): [none]

- Alexander Borodin (b. 1833): String Sextet in D Minor mvt 1 (1861), Piano Quintet in C Minor (1862), Symphony No. 2 mvt 4 (1876), "Requiem" (1878), Petite Suite (1885), Prince Igor "Overture" (1887)

- Alexander Glazunov (b. 1865): Symphony No. 1 (1884), "Slavonic Feast" (1888), Oriental Rhapsody mvts 2 & 5 (1889), Chopiniana mvts 1 & 4 (1892), Symphony No. 4 (1893), Symphony No. 5 (1895), The Seasons (1900), "Grand Waltz" and "Little Peasant Dance" (1900)

- Edward MacDowell (b. 1860): Piano Concerto No. 1 mvts 1 & 3 (1885), Piano Concerto No. 2 (1890), Woodland Sketches (1896)

- Vincent d'Indy (b. 1851): Symphony No. 1 mvt 1 (1872)

- Niels Gade (b. 1817): Symphony No. 5 (1852)

- Joachim Raff (b. 1822): String Sextet in G Minor mvt 2 (1872), Orchestral Suite No. 2 (1874), Symphony No. 9 mvt 4 (1878)

- Karl Goldmark (b. 1830): [none]

- Henri Vieuxtemps (b. 1820): Violin Concerto No. 3 mvt 3 (1844), Violin Concerto No. 4 mvts 2-4 (1850)

- Giuseppe Martucci (b. 1856): [none]

- Edward Elgar (b. 1857): Enigma Variations (1899), Pomp and Circumstance Marches (1901-1907 & 1930), Cello Concerto (1919)

- Mikhail Glinka (b. 1804): Spanish Overture No. 1 (1845), Spanish Overture No. 2 (1851)

- Ernst von Dohnányi (b. 1877): Ruralia Hungarica [orch.] mvts 2 & 5 (1924)

As a reminder, when I don't list any works by a given composer, that just means I didn't "love" or "strongly like" any of their works that I listened to, even if I "liked" a lot of them. And liking or disliking a piece is different from thinking it's good/bad aesthetically.

Movies/TV

Ones I "really liked" (no star), or "loved" (star):

- Soderbergh, Black Bag (2025)

- Bong, Mickey 17 (2025)

- Linklater, Hit Man (2023)

- Baker, Anora (2024) ★

- Various, Black Mirror, season 7 (2025)

- Various, The Righteous Gemstones, season 4 (2025) ★

- Hobkinson, Lover Stalker Killer (2024)

- Saulnier, Rebel Ridge (2024)

- Borgli, Dream Scenario (2023)

- Rogen & Goldberg, The Studio, season 1 (2025) ★

- Various, What We Do in the Shadows, season 1 (2019) ★

- Various, What We Do in the Shadows, season 2 (2020) ★

Games

All games I finished or decided to stop playing:

- ★ Cyberpunk 2077 and Phantom Liberty: A unique and highly ambitious game that, years after its release (with the 2.0 update and Phantom Liberty expansion), has finally come close to achieving its original ambitions. Great world-building, massive scope, open-ended exciting gameplay, frequently good storytelling, thought-provoking themes, and just lots of fun.

Books

I post book ratings and reviews to my Goodreads account instead of here.

- Here are ~all the works I listened to for some of the composers below: Yamada, Subramaniam, Einaudi.

- I think I hadn’t heard some of the classical pieces before, but I’m not sure which ones.

- For Liszt, I listened to this ~complete works playlist through S427 before switching to this highlights playlist. For Berlioz, I listened to this complete works playlist through H81b before switching to this highlights playlist. I listened only to highlights playlists (plus a smattering of other pieces) for Holst, Mussorgsky, Verdi, Smetana, Rossini, Franck (note the repeated pieces), Weber, Rameau, Alkan, Torelli, Telemann, Spohr, Albeniz, Gounod, Bruch, Duparc, Smyth, C.P.E. Bach (only through 6 Prussian Sonatas), Bruckner, Borodin, Glazunov, MacDowell, d'Indy, Gade, Raff, Goldmark, Vieuxtemps, Martucci, Elgar, Glinka, and Dohnányi. I listened to complete works playlists for Lalo and Wieniawski.

-

🔗 News Minimalist Microsoft's AI diagnoses complex cases 4 times better than doctors + 8 more stories rss

In the last 2 days ChatGPT read 57858 top news stories. After removing previously covered events, there are 9 articles with a significance score over 5.9.

[6.0] Microsoft's new AI system diagnoses complex cases 4 times better than doctors — zdnet.com (+8)

Microsoft's new AI, MAI-DxO, diagnosed complex medical cases from the New England Journal of Medicine with 85% accuracy, more than four times the accuracy of human physicians.

MAI-DxO uses a "virtual panel of physicians" approach with LLMs like GPT and Llama. It evaluates symptoms, asks questions, suggests tests, and displays its reasoning.

The AI is configurable to consider cost limitations, unlike unaided AI which might order every possible test.

[5.5] WHO certified Suriname malaria-free, a first for Amazon region — who.int (+4)

Suriname has been certified malaria-free by the World Health Organization, becoming the first country in the Amazon region to achieve this status. This marks a significant public health accomplishment.

The certification follows nearly 70 years of effort, with the last locally transmitted case of malaria recorded in 2021. Suriname implemented widespread testing, treatment, and community engagement to eliminate the disease.

This achievement highlights the possibility of malaria elimination in challenging environments and contributes to the WHO's goal of eliminating malaria in the Americas by 2030.

Highly covered news with significance over 5.5

[6.4] Zuckerberg lures AI talent from OpenAI with huge offers — wired.com [$] (+47)

[6.2] US, Japan, India, Australia announce critical minerals initiative to counter China's dominance — dw.com (+86)

[6.0] Canada ships first LNG export cargo from Pacific coast — reuters.com [$] (+5)

[6.0] Amazon deploys its 1 millionth robot in a sign of more job automation — cnbc.com (+7)

[5.8] US Schools will directly pay college athletes starting July 1 — cbssports.com (+6) [the first sports news that passed the threshold in a long while!]

[5.7] Russia claims full control of Ukraine's Luhansk region — thehindu.com [$] (+14)

[5.8] Scientists discovered a microbe redefining the minimum for life — elcomercio.pe (Spanish) (+2)

Thanks for reading!

— Vadim

You can create your own personalized newsletter like this with premium.

-

🔗 r/reverseengineering Computer Organization & Architecture in Arabic rss

I posted the first article of CO&A in Arabic. Good luck ✊🏼

submitted by /u/muxmn

[link] [comments] -

🔗 @trailofbits@infosec.exchange DARPA's AI Cyber Challenge finals are underway. Seven autonomous AI systems mastodon

DARPA's AI Cyber Challenge finals are underway. Seven autonomous AI systems are competing to find and patch vulnerabilities in critical open-source programs like the Linux kernel, SQLite, and cURL that power our digital infrastructure.

Learn more: https://blog.trailofbits.com/2025/07/02/buckle-up-buttercup-aixccs-scored-round-is-underway/

-

🔗 r/reverseengineering Castlevania: Symphony of the Night decompilation project rss

submitted by /u/r_retrohacking_mod2

[link] [comments]

-